Work by SCIoI’s David Mezey featured on the front page of Nature Portfolio’s npj Robotics

In a sunlit robotics lab at Science of Intelligence (SCIoI) in Berlin, ten little robots are moving with quiet purpose (but not-so-quiet buzzing) across the floor. There’s no central computer telling them where to go. They don’t talk to each other. They don’t even know where they or their neighbors are located in space. And yet, they move as one. The study behind them advances a long-term goal shared by biologists, engineers, and roboticists: developing a robotic swarm that, like a flock of birds or a school of fish, is decentralized and fully autonomous, relying only on local vision.

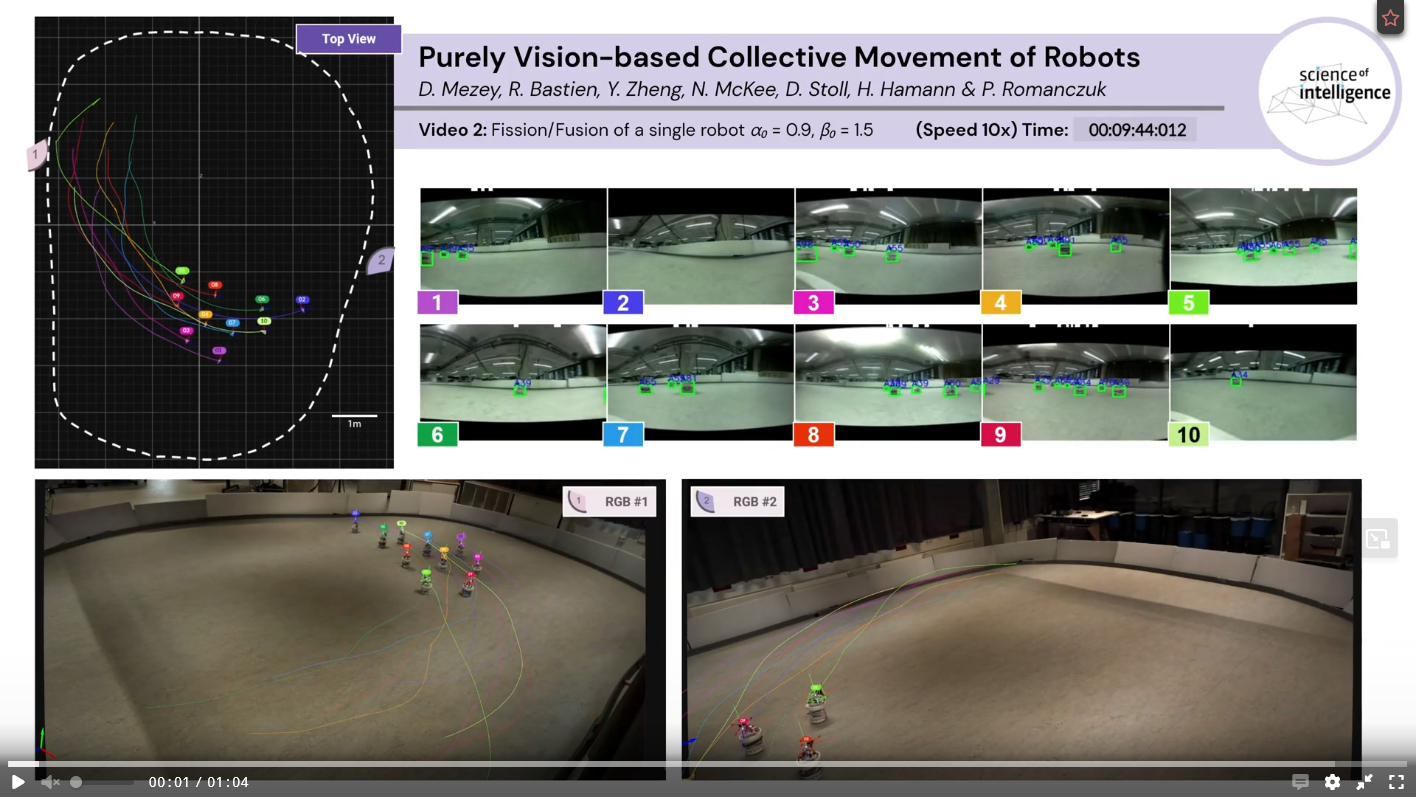

In this experimental set-up, the robots orchestrate their movement using only their “eyes” (i.e., cameras). The study, led by SCIoI researcher David Mezey together with Renaud Bastien, Yating Zheng, Neal McKee, David Stoll, Heiko Hamann and Pawel Romanczuk, was recently published under the title “Purely vision-based collective movement of robots”. It has now been featured on the front page of Nature Portfolio’s npj Robotics, a journal at the forefront of the field.

See more? Say less!

Unlike most robotic swarms, which rely on GPS signals, central servers, or wireless communication to coordinate their movement, the robots in David’s work operate solely on what they see through their individual cameras. They don’t need to exchange data. They don’t even “know” how fast or in which direction they or their peers are moving. All they need is the silhouette of another robot passing through their field of view. “It’s a design inspired by fish schools and bird flocks, where vision is the primary modality shaping collective motion,” David explains. “Fish don’t have GPS, and birds can’t send their exact positions to each other either. They perceive the world, and they respond to it. That’s exactly what we wanted our robots to do, too.”

Using a purely vision-based model first proposed in theoretical biology by some of the team members, David and his team developed a minimalist algorithm that turns camera frames into simple motion control commands. No maps of the environment, no memory, no orientation or position detection, no distance estimation, no tags or markers—just raw visual input, processed onboard, and turned into physical movement. As a result, when one robot sees another, it adjusts its own speed and direction reflexively.

The result is a cohesive, smooth group motion, complete with turns, regrouping events, and formations similar to real animal collectives.
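For readers who want a feel for how a camera frame could become a motion command without any position or distance estimate, here is a minimal sketch in Python. It is not the team’s published algorithm: the function name, the gains, and the specific rule (filled silhouette area pushes the robot away, silhouette edges pull it closer) are all assumptions chosen for illustration. The camera image is reduced to a one-dimensional strip of zeros and ones that marks the angles at which another robot’s silhouette is visible.

```python
import numpy as np

def vision_to_motion(visual_field, phi, speed, target_speed=1.0,
                     relax=0.1, gain_speed=1.0, gain_turn=1.0,
                     edge_weight=0.1):
    """Turn a 1D binary visual field into (d_speed, d_heading).

    Illustrative sketch only; all parameter names and values are hypothetical.
    visual_field[i] is 1 if a silhouette covers viewing angle phi[i]
    (radians, 0 = straight ahead, positive = left), otherwise 0.
    """
    visual_field = np.asarray(visual_field, dtype=float)
    phi = np.asarray(phi, dtype=float)
    dphi = phi[1] - phi[0]  # assumes evenly spaced viewing angles

    # Silhouette edges: bins where the field switches between 0 and 1.
    edges = np.abs(np.diff(visual_field, prepend=visual_field[0]))

    # Assumed rule: filled area repels (a large silhouette means "too close"),
    # edges attract (a small silhouette means "someone is out there").
    response = -visual_field * dphi + edge_weight * edges

    # Forward-facing content changes speed, sideways content changes heading.
    d_speed = relax * (target_speed - speed) \
        + gain_speed * np.sum(np.cos(phi) * response)
    d_heading = gain_turn * np.sum(np.sin(phi) * response)
    return d_speed, d_heading

# Example: a 175-degree camera with one small silhouette to the front-left.
phi = np.linspace(-np.radians(87.5), np.radians(87.5), 176)
field = np.zeros(176)
field[115:118] = 1
print(vision_to_motion(field, phi, speed=1.0))
```

Nothing in the sketch stores a map, remembers earlier frames, or computes where a neighbor actually is. A small, distant silhouette is mostly edge and draws the robot toward it, while a large, nearby one is mostly area and slows the robot down.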

A change of direction in swarm design

For years, scientists have imagined teams of small, autonomous robots helping out in places where humans can’t easily go, supporting environmental research, aiding in search-and-rescue missions, or assisting in the exploration of remote or dangerous terrain. But most existing robot swarms rely on fragile infrastructure: they depend on constant communication, centralized coordination, or a steady stream of external data. These dependencies can be a serious liability, especially in unpredictable environments. A broken link, a blocked signal, or missing information can bring entire systems to a halt.

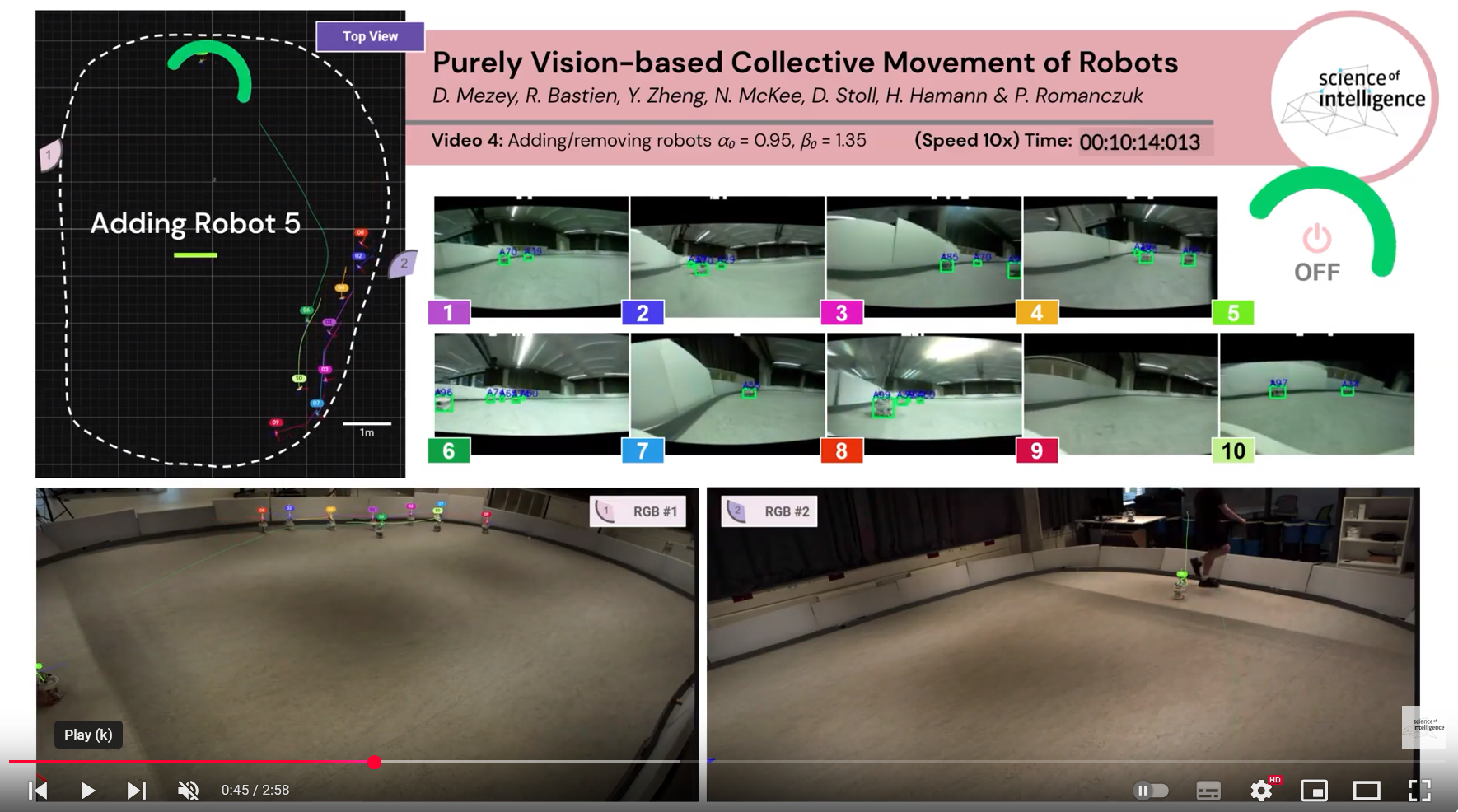

David’s approach offers an alternative: “By removing communication and central control, we remove single points of failure. And that makes the whole system more resilient. This is similar to how, by overcoming the challenges of orchestrating movement using local information only, animal collectives become extremely robust to perturbations.”

This resilience, which comes from decentralized, vision-based control, was tested across more than 30 hours of real-world experiments in a large, enclosed arena. Despite the limitations of a camera with only a 175-degree view (meaning the robots have large “blind spots”), the swarm adapted, flowed, and recovered from occasional fragmentation. If one robot lost sight of the group, it could find its way back later on using only its camera feed.

This behavior, known as “fission-fusion,” is common in natural animal groups but has rarely been achieved in robots, especially without communication or memory: