Active interconnections exploit the complementarity and synergy of information produced by the different components, as illustrated in Figure 10b. Active interconnections can broker and adapt this information between the components, depending on the situation and the needs of each component (Figure 10c). In this design pattern, the behavior of the entire system can respond to novel environmental circumstances by exploiting the rich combinatorics of adjusting the information flow between the components. As a result, active interconnections are an important building block in the production of behavioral diversity in robust intelligent systems.

Empirical support: Strong support for active interconnections comes from the study of biological systems, even though the principle was never articulated explicitly in these works. Research in biology and medicine has shown that many biological systems exhibit active interconnections at different levels of abstraction. For example, active interconnections exist at the genetic level (alternative splicing) (Leff et al., 1986), at the cellular level (adaptive communication channels between cells) (Vaney and Weiler, 2000), and at the tissue level (organ transplantation) (Blackiston and Levin, 2013). Common to all these examples is that behavioral flexibility and the ability to respond robustly to novel situations are realized via active interconnections.

A rudimentary implementation of this principle produced an award-winning robotic system, described in an award-winning publication (Eppner et al., 2018). Twenty-six international teams from industry and academia participated in the inaugural Amazon Picking Challenge. Our team won the challenge with almost twice as many points as the second-place team from Massachusetts Institute of Technology. A key contributor to this success was the robustness of the system, achieved with active interconnections. This principle also plays a key role in interactive perception (Bohg et al., 2017; Mengers et al., 2023). Our examples show that active interconnections, taken as a design pattern, produce robust and versatile behavior in artificial systems.

The principle of active interconnections has also proven to be a powerful modeling tool for biological systems. For example, we produced a very simple active-interconnections-based model of human perception for several visual illusions (Battaje et al., 2024). This model was fitted to experimental data from human subjects and generalized to unseen parameter ranges and to additional aspects of perception that were not explicitly modeled. Surprisingly, an analysis of the model even yielded predictions about previously unknown properties of the perceptual process, and these predictions were confirmed in psychophysical experiments with humans. Active interconnections are the dominant feature in the class of models we produced. Their expressive and predictive power provides support for active interconnections as an important principle acting in intelligent systems.

We applied the principle of active interconnections to model the interaction between object-based attention and action planning, for example, the planning of eye saccades. Based on the principle, we discovered that the time spent in functional foveation categories (object detection, object inspection, object revisits) depends on the interaction of at least one top-down process (object segmentation maps quantifying object regions) and two bottom-up processes (inhibition of return quantifying saccade history, and saliency maps quantifying bottom-up feature content) (Roth et al., 2023, 2024). We extended this work to investigate object-based visual selection and gaze-dependent object segmentation as two active, interconnected processes. By leveraging the model’s ability to represent uncertainties, we were able to propose parsimonious alternatives to the empirically postulated “inhibition of return” mechanism (Mengers et al., 2024b).

Active interconnections also play a crucial role in collective intelligence (Tump et al., 2023; Raoufi et al., 2021, 2023). We showed that the key enablers of collective intelligence lie 1) in the interactions between cognitive parameters at the individual level, and 2) in the interactions of cognitive parameters between agents at the collective level. Regarding 1), when and which decisions individuals make is shaped by the interplay of different cognitive parameters in our modeling approach (i.e., drift diffusion modeling). The combination of the starting point, drift rate, and threshold shapes how and when individuals decide, and only by studying their interactions can we understand intelligent (human) decisions. Regarding 2), at the collective level, the subsequent synchronization of cognitive parameters between individuals plays a key role in whether collectives show intelligence (or stupidity).
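To make the interplay of starting point, drift rate, and threshold concrete, the following is a minimal sketch of a single-agent drift diffusion trial. It is an illustration under stated assumptions, not the model used in the cited studies: the function name simulate_ddm, the symmetric bounds at plus and minus the threshold, the unit noise level, and the Euler time step are all hypothetical choices.

```python
import random


def simulate_ddm(start, drift, threshold, noise=1.0, dt=0.001,
                 max_time=5.0, rng=None):
    """Simulate one drift-diffusion trial (illustrative sketch).

    Evidence starts at `start` and accumulates with mean rate `drift`
    plus Gaussian noise. The trial ends when the evidence reaches
    +threshold (choice 1) or -threshold (choice 0).
    Returns (choice, decision_time); choice is None if neither bound
    is reached before `max_time`.
    """
    rng = rng or random.Random()
    evidence = start
    t = 0.0
    sqrt_dt = dt ** 0.5
    while t < max_time:
        # Euler-Maruyama step: deterministic drift plus scaled noise.
        evidence += drift * dt + noise * sqrt_dt * rng.gauss(0.0, 1.0)
        t += dt
        if evidence >= threshold:
            return 1, t
        if evidence <= -threshold:
            return 0, t
    return None, t
```

In this sketch, the starting point biases which bound is reached, the drift rate sets how quickly evidence favors one option, and the threshold trades speed against accuracy. Varying one parameter while holding the others fixed shows only part of the picture, which is why the text emphasizes their interaction.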

Furthermore, we also applied the principle explicitly to modeling the dynamics in collective decision-making processes. The increased expressiveness of the model, relative to models that had been considered previously, led to a more realistic understanding of how collectives form opinions (Mengers et al., 2024a).

The fact that a single principle can serve as a model language for such a diverse set of intelligent behaviors suggests that it captures a regularity capable of generating behavior in a variety of settings (compare the required properties of a principle). Active interconnections are “a general scientific […] law that has numerous special applications across a wide field,” describing “a fundamental quality determining the nature of something” (mechanistic principles).

Multiple computational paradigms

Principle: The principle of multiple computational paradigms states that the robustness and versatility of intelligent behavior results—at least in part—from the synergistic application of multiple computational paradigms.

Explanation: We view intelligence as a form of computation (Brock, 2024). Computation can be realized in different paradigms, e.g., neural, digital, mechanical, or quantum. Each of these paradigms has advantages over others, depending on the type of problem to be solved. By combining multiple computational paradigms, sub-problems can be addressed using the most suitable paradigm. The literature often talks about embodiment in this context (Varela et al., 1991). We have argued that embodiment is not the most appropriate term. Rather, the use of multiple computational paradigms is the result of embodiment (Brock, 2024).

In this view, a biological or artificial agent performs computation to generate intelligent behavior. The computation is composed of subroutines implemented in different computational paradigms (Brock, 2024). For example, humans perform neural computation in their nervous systems but also mechanical computation with their bodies. We will present evidence from dexterous manipulation below, but we use this example for illustration here, too. When grasping an object, humans perform neural computation to identify the object and to trigger movements that bring the hand close to it. However, the specific locations of each finger on the to-be-grasped object are not explicitly computed. Humans employ the mechanical computation performed by a compliant, soft-material hand: the fingers land as they may. Neural computation has the task of bringing the hand into position such that the mechanical computation of the hand can take over the determination of the final grasp pose. Each computational paradigm solves the sub-problem at which it is good. The result is highly robust and adaptable behavior.

Empirical support: For this principle, we will emphasize a single case of evidence focusing on in-hand manipulation. We want to demonstrate how the principle of multiple computational paradigms, when applied correctly, leads to solutions that differ fundamentally from the engineered solutions we know from robotics and AI today. However, the use of mechanical computation in addition to digital/neural computation (and thus the application of the principle) was the first widely accepted principle and we have dozens of results that support it.

Figure 11: Robotics and Biology Laboratory TU Berlin Hand 3 performing in-hand manipulation

In-hand manipulation (Figure 11) has become one of the benchmark problems for current deep-learning based approaches (Handa et al., 2023). These approaches leverage a single computational paradigm (digital computation). Here, we show the disruptive impact of actively considering mechanical computation as a second paradigm. But we have also shown that the same principle applies to human grasping (Puhlmann et al., 2016).

We developed the Robotics and Biology Laboratory TU Berlin Hand 3 as a robot platform for manipulation (Puhlmann et al., 2016). It is designed to provide mechanical computation in support of dexterous manipulation. In an extensive empirical study, we demonstrated that this mechanical computation contributes significantly to the robustness of dexterous manipulation behavior (Bhatt et al., 2021). To fully exploit these advantages, we must adapt all components of the system accordingly (YouTube video). In effect, we must identify the sub-problems that remain to be solved after the mechanical computation of the hand has done its job. We have devised a self-teaching approach to learning dexterous manipulation skills that autonomously improves its own capabilities during real-world experience, starting with a single demonstration of the desired skill (Sieler and Brock, 2023). The result is an extremely robust and versatile framework for acquiring, incrementally improving, and composing manipulation skills.

The contrast between the deep-learning based approaches (e.g., Handa et al., 2023) and our solution based on the principle of multiple computational paradigms could not be larger. The principle-based solution is more robust and more general than the deep-learning based approaches (Patidar et al., 2023). Our approach relies on very simple computations, compared to the hundreds of years of simulated experience in a high-fidelity physics simulator required for the learning approach—not to mention the sizable energy consumption attached to it. This contrast demonstrates how exploiting the principles of intelligence can close the performance gap between biological and artificial systems, thereby making the desirable properties of biological systems (generality, robustness, energy-efficiency), available to engineering.

Agent-environment computation

Principle: The principle of agent-environment computation states that the generation of intelligent behavior leverages a flexible coupling of the agent to the environment. Through this coupling, the agent can create novel and task-related regularities. These, in turn, are exploited during the generation of intelligent behavior.

Explanation: Intelligent behavior adapts to environmental variations and is robust to noise. Key mechanistic ingredients for accomplishing this are regularities, i.e., relationships between action and sensing parameters that produce useful and robust action/perception couplings. These regularities can be seen as a lower-dimensional manifold embedded in the combined state space of the world and the intelligent agent. The existence of such regularities is a proven necessity (Wolpert and Macready, 1997); otherwise, intelligence would most likely not exist.

Given the central role of regularities in generating intelligent behavior, it becomes clear that the ability to produce novel regularities contributes importantly to intelligence. Some regularities exist in the world, without the agent’s contribution. Examples of this include Newton’s laws of motion, the visual appearance of object boundaries as edges, or the fundamental laws of logic. But others can be created by the agent, for example, by tying a rope around a pole to draw a circle into the sand. Creating and exploiting such regularities contributes to intelligent behavior.

Empirical support: The important role of the environment in generating intelligent behavior is well-established and empirically supported by many prior works. But the degree to which agent-environment computation is central to intelligence has only recently received significant attention. This distributed computation enables robust, intelligent behavior (Triesch et al., 2003; Clark, 2011; Murphy Paul, 2022) and this is closely related to the embodiment hypothesis from cognitive science, which states “that intelligence emerges in the interaction of an agent with an environment and as a result of sensorimotor activity” (Smith and Gasser, 2005). This is not only true for physical interactions with the environment but also for perception, where these regularities are often called sensorimotor contingencies (O’Regan and Noë, 2001; Noë, 2004).

The coupling of action and perception offers opportunities for exploiting regularities. We have shown that artificial agents, by fixating on features of the environment, can robustly and efficiently estimate distances and divide the scene into two parts, one close and one further away (Battaje and Brock, 2022). In humans, we showed that they learn relationships between motor acts (such as saccades, pursuit, or even drift movements) and drastic sensory changes, such as large-field displacements of the entire visual image across the retina (in vision), or increased stretch in the extraocular muscles (in proprioception). We created a computational framework based on the notion that establishing a sensorimotor contingency (Rolfs and Schweitzer, 2022) can solve the problem of visual stability (Schweitzer et al., 2023).

Motivated by human manipulation (Puhlmann et al., 2016), we developed a novel approach to programming robots via human demonstrations (Li and Brock, 2022; Li et al., 2023). It exploits a regularity called environmental constraints (Eppner et al., 2015) to solve complex manipulation problems from a single demonstration. The resulting policies are robust and generalize across objects and environmental contexts. When applied to a recent benchmark that cannot be solved by existing deep-learning based learning-from-demonstration approaches using 1000 demonstrations (Heo et al., 2023; Luo et al., 2024), our approach solved the benchmark robustly from a single demonstration. This demonstrates how the principles of intelligence contribute to closing the performance gap between biological and artificial intelligence. It also demonstrates the value of pursuing alternative approaches to Artificial Intelligence.

Following the theme of closing the performance gap between biological and artificial agents, we demonstrated that in robot manipulation tasks, such as putting objects into drawers or cabinets (which requires the drawer to be opened first), it is not necessary to maintain detailed knowledge about the world’s state to support planning. Instead, it is under most circumstances sufficient to use the environment as the representation of its own state and to apply reactive control (Baum and Brock, 2022). In this example, most of the cognitive/computational effort is avoided by “outsourcing” functionality to the environment.

The importance of implementing the structure of the environment in Artificial Intelligence systems is further illustrated by a project on decision-making in 3D sequential manipulation. The research shows that representations reflecting the 3D physical structure of our environment are much more successful in guiding decisions than generic deep neural networks (Zhou et al., 2023).

Finally, evidence for the principle of agent-environment computation stems from research on collective foraging. Perceptual constraints and the embodiment of agents naturally limit the amount of social information available to the individuals, and thereby prevent the overuse of social information, which would have negative effects on collective performance (Mezey et al., 2024).

Adaptive representations

Principle: The principle of adaptive representations states that behavioral flexibility is facilitated by adjusting the representations of world state to the agent’s environment, task, and goal. Adaptive representations ensure that a system represents aspects of the world that are most relevant to behavior in a way that is most conducive to generating that behavior.

Explanation: Sensations of the world and actions operating on the world are affected by many factors, e.g., objects, lighting, or the agent’s posture. Perfect knowledge of these processes could, in theory, lead to a disentangled representation (Locatello et al., 2018), i.e., a representation whose components are fully independent of each other. This idealized situation cannot be achieved, since information about the world is always uncertain and partial. As a result, the representations of an intelligent agent are always approximations to ideal representations. Given a particular task, one can identify a “good” representation for which the relevant factors are more disentangled than in others. Since such “good” representations will depend on the task, an intelligent agent must possess adaptive representations.

On a mechanistic level, representational adaptation can be realized by adjusting the weighting of features (Memelink and Hommel, 2012). For example, when grasping a cup, grasp-relevant features such as shape are more strongly weighted than grasp-irrelevant features such as color. The differential weighting of information is related to an altered information flow between components in the system (see active interconnections). While adaptive representations are a crucial property of individual and social intelligence, how much explanatory power they hold for collective intelligence is still an open question.

Empirical support: Adaptive representations play a crucial role in the flexible adjustment to different tasks. In a project on task adjustments, we could demonstrate, using brain decoding, that the same task structure is represented differently in different brain regions and that these representations of task structure change in different ways after a task is completed.

On the social level, by decoding electroencephalography (EEG) signals, we demonstrated that participants represent the anticipated behavior of another agent differently depending on whether they act together with this agent or alone (Formica and Brass, 2024).

Another example of adaptive representations shows that participants adjust their representation of a facial expression depending on the social context: a neutral face was represented as smiling when it occurred in a positive social context and with a more negative emotional expression in the negative social context (Maier et al., 2022).

We have been able to show that the processing of social-emotional signals is flexibly tuned to the attributes, abilities, and states of mind of individual social agents (Eiserbeck et al., 2023), revealing adapted co-representations of social agents in humans and robots (Wudarczyk et al., 2021; Kirtay et al., 2020). Based on these findings, we have developed a framework for creating computational models of co-representation in robots that predict and simulate the actions of social communication partners.

We demonstrated that adaptive representations play a crucial role in a teaching context, where the way a robotic tutor was represented strongly affected the level of learning from this tutor.

Multimodality

Principle: The principle of multimodality states that processing information through multiple sensory channels is advantageous when operating in an uncertain and non-stationary world. The benefit of multimodality is that each modality captures overlapping, non-redundant, synergistic information about a particular phenomenon in the world.

Explanation: In multimodal information processing, modality-specific features are fused to create a unified representation. The overlap between modalities helps compensate for incomplete or noisy information in a single modality. Multimodal systems exploit the contingent interaction of information processing in different modalities. Seeing the location of a loudspeaker, for example, can alter the spatial localization of a sound source. Which modality-specific features enter this multimodal representation depends on a selection process that takes into account the reliability and relevance of modality-specific features (Ernst and Bülthoff, 2004). The multimodal representation in turn affects modality-specific information processing, leading to an interactive processing loop. Multimodality can be understood as a special case of adaptive representations and is the result of active interconnections.
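One common way to formalize reliability-dependent fusion is inverse-variance weighting of independent estimates, sketched below. This is an assumed textbook model chosen for illustration; the text above does not commit to a specific fusion rule, and the function name fuse_estimates and the numbers in the usage note are hypothetical.

```python
def fuse_estimates(estimates, variances):
    """Fuse modality-specific estimates by inverse-variance weighting.

    Assumes each modality delivers an independent, unbiased estimate of
    the same quantity together with its noise variance. More reliable
    modalities (lower variance) receive larger weights.
    Returns (fused_estimate, fused_variance, weights).
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need one variance per estimate")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    reliabilities = [1.0 / v for v in variances]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    fused = sum(w * e for w, e in zip(weights, estimates))
    # The fused variance is never larger than the best single modality.
    return fused, 1.0 / total, weights
```

As a usage example, a visual location estimate of 10 degrees with variance 1 and an auditory estimate of 20 degrees with variance 4 would be fused by calling fuse_estimates([10.0, 20.0], [1.0, 4.0]). The fused estimate lies closer to the visual value because vision is assumed to be the more reliable channel in this example.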

Empirical support: We have extensively explored multimodality in different forms of intelligence. In the context of robots we can show, for example, that integrating vision and sound helps to estimate drawer motion by compensating for visual occlusions with acoustic information (Baum et al., 2023; Mengers et al., 2023). Furthermore, we demonstrated that learning-based systems can integrate information from perceptual modalities to predict successful decisions on a logic level (Driess et al., 2023).

Interestingly, integrating different sensory modalities also affects collective behavior. In a dense group, an individual can only see its nearest neighbors, whereas auditory information can be perceived even from very distant group members. In preliminary work, we have investigated the interaction of vision and sound in group contagion.

Further evidence for the role of multimodal information processing in collective behavior was provided by the observation that a combination of different modalities (vision and sound) simulating a predator cue elicits deeper and longer collective dives in fish (Lukas et al., 2021).

Finally, we investigated how non-verbal immediacy (a construct capturing multimodal non-verbal behaviors) affected learning in a teaching context. The results demonstrated that non-verbal immediacy increased motivation but did not facilitate learning (Frenkel et al., 2024).

Multi-timescale computation

Principle: Adaptability to environmental change is a hallmark of intelligence. A mechanistic prerequisite for such adaptation is the presence of multiple time scales of computation to detect, track, and act upon changes in the environment. Changes occur across timescales, and so, the timescales of computation must match them.

Explanation: In the simplest case, at least two timescales, a fast and a slow one, must be present. The sampling frequency of the fast timescale should be significantly higher than the environmental rate of change. Integration of the environmental dynamics then provides (quasi-stationary) statistics on the environmental signal. This allows the detection of changes and adaptation to them on a slower timescale specific to the ecological niche. The slower timescale should be slow enough to filter out random variations, but also fast enough, i.e., on the order of the relevant rate of change of the environment, to allow for successful adaptation.
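The two-timescale idea can be sketched with a pair of exponential moving averages: a fast one that tracks the current signal and a slow one that serves as the adapted baseline. This is a minimal illustration, not a model from the cited work; the class name TwoTimescaleTracker, the rates, and the threshold are assumptions.

```python
class TwoTimescaleTracker:
    """Track a signal on a fast and a slow timescale (illustrative sketch).

    The fast average follows recent samples closely; the slow average
    filters out short-lived fluctuations and adapts gradually. A change
    is flagged when the two averages disagree by more than `threshold`.
    """

    def __init__(self, fast_rate=0.3, slow_rate=0.01, threshold=1.0):
        if not 0.0 < slow_rate < fast_rate <= 1.0:
            raise ValueError("require 0 < slow_rate < fast_rate <= 1")
        self.fast_rate = fast_rate
        self.slow_rate = slow_rate
        self.threshold = threshold
        self.fast = None
        self.slow = None

    def update(self, sample):
        """Consume one sample; return True if a change is detected."""
        if self.fast is None:
            # Initialize both timescales with the first observation.
            self.fast = self.slow = float(sample)
            return False
        self.fast += self.fast_rate * (sample - self.fast)
        self.slow += self.slow_rate * (sample - self.slow)
        return abs(self.fast - self.slow) > self.threshold
```

Feeding the tracker a signal that is constant and then jumps to a new level flags the change shortly after the jump, while the slow average continues to adapt toward the new level over many more samples.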

Due to the fundamental nature of this principle, it is a necessary pre-condition for intelligent behavior in non-stationary environments with abundant examples in biology. They include the fast scale of individual neuronal firing and the slow time scale of spike-time dependent synaptic plasticity (Caporale and Dan, 2008), or the presence of a whole hierarchy of time scales for information processing in the brain (Kiebel et al., 2008), and in animal behavior (McCann et al., 2017; Bialek and Shaevitz, 2024). It has also been identified as a core challenge for the reinforcement learning of artificial agents in non-stationary environments (Padakandla, 2021).

Empirical support: We found empirical support for this principle across different systems. Examples include active vision where information acquired during fast saccadic eye movements shapes perceptual processes on longer time scales (Rolfs and Schweitzer, 2022), including object continuity (Schweitzer and Rolfs, 2021) or motion perception (Rolfs et al., 2023).

Similarly, models of eye movement control in natural, dynamic scenes showed the highest similarity to human scan paths when including multiple time scales for selecting target objects and subsequently inhibiting them (Roth et al., 2023).

Another example is the presence of vastly different time scales in collective predator evasion, a fast (individual) diving response inducing a collective escape wave, and long lasting collective excitation with repeated wave activity (Gomez-Nava et al., 2023; Doran et al., 2022).

In exploration and social interaction during collective exploitation, multiple timescales ensure precise collective decisions in swarming robots (Raoufi et al., 2021, 2023).

Incremental assembly of capabilities

Principle: The principle of the incremental assembly of capabilities describes the ability of intelligent agents to efficiently find multifaceted solutions to complex tasks with very high-dimensional solution spaces via the incremental assembly of solutions to simpler, low-dimensional problems in an iterative way.

Explanation: The best way to solve a complex problem in a high-dimensional space is first to solve simpler problems in lower-dimensional spaces and to put the solutions together appropriately. If you have discovered an obvious regularity relevant to a task, you can effectively replace the concerned dimensions with your knowledge of this regularity. As a result, the problem space is now simpler because some dimensions are already explained. In this simplified space, a new regularity might be within the reach of the learning ability of the agent: the agent discovers this new regularity, can use it in the future, and has further reduced the dimensionality of the remaining space. More and more regularities move within reach of the agent. Each of the regularities can be viewed as a factor in the generation of behavior. The agent composes and re-composes these factors to achieve behavior (Li et al., 2024). Simple real-world examples of this principle at work are: (1) Children learn mathematics at school incrementally: first addition, then multiplication, division, and so on; and (2) children learn motor control for their limbs proximal to distal, and coarse to fine, increasing the dimensionality only when the first degrees of freedom are mastered (Baillargeon, 2002).

Empirical support: SCIoI 1 research on novel variants of federated learning algorithms showcases the principle at work. Instead of a central processing unit fitting a model from a vast set of data (i.e., solving a large optimization problem), federated learning splits the overall problem into several smaller sub-problems. Subsequently, the solutions to these are combined to provide a solution for the overall problem. In an iterative fashion, local computational agents fit local models from (disjoint) subsets of the entire dataset and send model parameters to a central unit, which assembles an updated overall model and transmits it back to the local agents. Dramatic improvements (in terms of both efficiency and privacy) are possible by recognizing that the central unit does not need access to the local parameter vectors (Öksüz et al., 2023, 2024). Instead, a weighted sum of those vectors (plus rudimentary information on the weights) turns out to be sufficient. If parameter vectors are transmitted simultaneously via a wireless channel, superposition will provide precisely this.
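The aggregation step described above can be sketched as follows. This is a generic federated-averaging illustration, not the algorithm of Öksüz et al.: the function names, the least-squares toy model, and the use of dataset sizes as weights are assumptions, and the wireless superposition is replaced by a plain noise-free sum.

```python
def local_update(params, data, lr=0.1, steps=20):
    """Run a few gradient steps of least-squares fitting y ~ w*x + b
    on one agent's local data, starting from the global parameters."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum((w * x + b - y) * x for x, y in data) / n
        grad_b = sum((w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def superpose(local_params, weights):
    """Stand-in for the channel: expose only the weighted sum of the
    parameter vectors and the sum of the weights, never the individual
    vectors themselves."""
    dim = len(local_params[0])
    summed = [sum(wt * p[i] for p, wt in zip(local_params, weights))
              for i in range(dim)]
    return summed, sum(weights)


def federated_round(global_params, datasets, lr=0.1, steps=20):
    """One round: local fitting, superposition, central normalization."""
    local_params = [local_update(global_params, d, lr, steps)
                    for d in datasets]
    weights = [len(d) for d in datasets]  # assumed weighting by data size
    summed, total_weight = superpose(local_params, weights)
    return tuple(s / total_weight for s in summed)
```

In this sketch, the central unit works only with the output of superpose, which mirrors the observation that the weighted sum and the total weight are sufficient to assemble the updated overall model.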

Adaptation of structure

Principle: The principle of the adaptation of structure states that an intelligent system, to respond to long-term changes in its ecological niche, may change its components to adapt their generalization to the novel conditions. Such changes include removal or addition of components, but also the re-factoring of existing components.

Explanation: The principle of active interconnections takes the components as given and leverages active interconnections for good generalization. In contrast, the adaptation of structure re-distributes the capabilities among the system’s components to improve generalization. We hypothesize that active interconnections enable generalization for structurally similar environmental variations and on ontogenetic time scales. In contrast, adaptation of structure enables generalization for substantial deviations of environmental structure. Also, the time scales for those changes can be longer, sometimes happening ontogenetically, sometimes over generations (cultural evolution), and sometimes genetically. As an analogy from linear algebra: whereas active interconnections combine existing basis vectors in useful ways, adaptation of structure corresponds to changing the basis vectors themselves.

The re-distribution of capabilities across components (re-factoring) changes the regularities that can be encoded and exploited within the system. Intelligent behavior is facilitated if this change represents a move toward the regularities relevant in the current ecological niche.

Empirical support: In cognitive neuroscience, a very similar phenomenon has been termed “neuronal recycling” (Dehaene and Cohen, 2007). It refers to using a specific neural structure for a new cognitive operation (e.g., re-using parts of the visual cortex involved in object recognition for reading).

Circumstantial support comes from system building in robotics, where the adaptation of structure, unsurprisingly, leads to systems with different capabilities and suited to different tasks (ecological niches) (Eppner et al., 2018).

The adaptation of structure is one possible explanation for exaptations, a phenomenon considered by some to be fundamental to the origin of cognitive abilities.

Other scientific achievements of SCIoI

Insights that may lead to paradigm shifts

To illustrate the highly interdisciplinary nature of SCIoI and how it leads to scientific progress, we will present a selection of insights and discoveries by SCIoI and our new PIs. These discoveries may accelerate intelligence research by questioning and replacing established assumptions.

Focusing on group-level variation to study intelligence: “This is the original sin of the cognitive sciences—the denial of variation and diversity in human cognition” (Levinson, 2012). Cognitive scientists often implicitly idealize intelligence as a species-specific stable system, invariable in its basic functions, responding flexibly to situational factors (Fodor, 1983). Consequently, if the situation is controlled (using standardized experiments, for example), the basic structure of a species’ intelligence can be accessed. Given these assumptions, a rather small sample of individuals is sufficient to inform general theory. The result is the ongoing practice that almost all studies on human intelligence rely on convenience samples from countries that cover only a small subsection of human diversity (Blasi et al., 2022; Henrich et al., 2010; Nielsen et al., 2017). Consequently, variation is often dismissed as noise around the signal. However, we believe this “noise” is key to understanding the principles of intelligence.

We utilize variation across populations and the individuals therein, in humans and other animals, with the aim of turning theoretical and ideological assumptions about a universal human mind into an empirical program. We showed that human spatial intelligence is not universal, but varies systematically across human groups and is largely structured by culture-specific learning that overrides universal heritable predispositions (Haun et al., 2006, 2011b; Haun and Rapold, 2009). In contrast, we showed that children from 17 diverse communities around the world process another’s gaze in universal ways, irrespective of the absolute performance differences between groups (Bohn et al., 2024). We formulated a universal process underlying a foundational socio-cognitive ability in humans, despite cultural variations in behavior. Finally, we extended this approach to the study of chimpanzee intelligence. We showed that chimpanzees’ social behavior (Van Leeuwen et al., 2018), social tolerance (DeTroy et al., 2021), and prosociality (Van Leeuwen et al., 2021) vary systematically across groups, challenging traditional assumptions about species-level differences. In contrast, the structure of chimpanzee social relations can be predicted universally by a model extracted from human social networks, identifying universal features of primate social intelligence (Escribano et al., 2022).

Taken together, our results demonstrate that the joint study of inter-individual and inter-cultural variation is key to understanding principles of intelligence. Variation across intelligent systems makes it possible to assess the variance in the structural features of intelligence. This shift in perspective eliminates unsustainable assumptions about the invariability of intelligence and instead proposes an empirical program that centers on the study of systematic variability across individuals, groups, and species (Liebal and Haun, 2018; Nielsen and Haun, 2016).

Addressing the modeling crisis in cognitive science: “The conceptual framework guiding most work in comparative animal cognition (whether by ethologists or psychologists) is insufficiently formalized to support rigorous science in the long run” (Allen, 2014).

Inferring models of cognition and intelligence from observations of behavior remains a poorly understood problem. At its core lies the fact that any sufficiently complex model can reproduce any behavior. This has been labeled the many-to-one mapping problem, i.e., the insight that a behavior can be produced by many different cognitive processes, and that for each of these processes many possible models exist (Taylor et al., 2022). It is mathematically implausible to collect sufficient behavioral data to identify, from this “ocean” of possible models, the specific model that corresponds to the mechanistic implementation in the target system. This is a problem we face in SCIoI every day.

Over the past five years, we have recognized this fundamental problem during our own experiments on animal cognition. We have begun to develop approaches to experimentation that address the modeling challenge (Baum et al., 2024; Brock, 2024). Recognizing that the information contained in behavioral observations does not suffice for model selection, we are turning model selection into an incremental process that accumulates discriminatory information across different studies. This approach to model inference dovetails with our synthetic approach to intelligence research and with the fact that mechanistic principles can describe aspects of the model without specifying the model in its entirety. Our approach to modeling has been applied successfully in various SCIoI studies. Given SCIoI’s unique composition of disciplinary expertise, we are well positioned to develop our approach into a formalization of comparative cognition research that can indeed “support rigorous science in the long run.”
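
One way to picture model selection as an incremental process is sequential Bayesian model comparison, in which each study contributes a log-likelihood term to a running score per candidate model. The sketch below is an illustrative assumption and not the procedure of the cited works. The function names, the two toy models, and the encoding of behavior as discrete choices are all hypothetical.

```python
import math

def log_likelihood(model, observations):
    """Log-probability of a sequence of discrete choices under one model.

    `model` maps each possible choice to its predicted probability.
    """
    return sum(math.log(model[choice]) for choice in observations)

def accumulate_posterior(models, studies):
    """Update posterior model probabilities study by study.

    models:  dict name -> {choice: probability}  (hypothetical candidates)
    studies: list of observation lists, one list per study
    Returns a list holding the posterior after each study, starting
    from a uniform prior over the candidate models.
    """
    names = list(models)
    log_post = {name: -math.log(len(names)) for name in names}
    history = []
    for observations in studies:
        for name in names:
            log_post[name] += log_likelihood(models[name], observations)
        # Normalize with the log-sum-exp trick for numerical stability.
        peak = max(log_post.values())
        total = peak + math.log(sum(math.exp(v - peak) for v in log_post.values()))
        history.append({name: math.exp(log_post[name] - total) for name in names})
    return history
```

With two toy models that differ only slightly in their predictions, a single small study shifts the posterior very little, while repeated studies move it further. This mirrors the point that one set of behavioral observations rarely suffices on its own.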

Self-organized criticality and distributed computation: It has been hypothesized that intelligent systems, including animal groups exhibiting collective intelligence, should self-organize toward special regions of their parameter space, so-called critical points, where the system’s dynamical behavior undergoes a qualitative change (phase transition) (Mora and Bialek, 2011). At these points of the parameter space, some aspects of distributed computation become optimal. While there is considerable theoretical support for the criticality hypothesis, empirical support, in particular outside neuronal dynamics (Wilting and Priesemann, 2019), remains limited.

SCIoI delivered strong empirical evidence that two very different biological systems operate at a critical point: 1) giant fish shoals under high risk of predation (Gomez-Nava et al., 2023), and 2) Placozoa (Trichoplax adhaerens), the simplest multicellular animal, which faces the challenge of coordinating its movement and responding to environmental stimuli without a central nervous system (Davidescu et al., 2023). In contrast, we found that fish shoals under laboratory conditions do not operate at a critical point but rather in a so-called sub-critical regime, which is adaptive in safe environments because it does not spread false alarms (Poel et al., 2022). However, these fish shoals shift toward critical points if their risk perception increases. We also showed that density modulation is the main mechanism for self-tuning toward (or away from) critical points in these systems. In addition, by using agent-based predator-prey models, we provided a completely new perspective on the criticality hypothesis (Klamser and Romanczuk, 2021). We demonstrated that the optimal response at criticality is not due to optimal information transfer, as generally assumed, but due to dynamical group structure. We also showed, contrary to previous results, that the critical point is maximally evolutionarily unstable, raising fundamental questions about adaptation mechanisms.

Our results suggest that for biological intelligent systems the optimum is not to be at criticality at all times, but rather to flexibly tune the distance to critical points based on the environmental context (Romanczuk and Daniels, 2023). This provides a novel perspective on the “criticality hypothesis,” suggesting new hypotheses with potentially transformative power.
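
The difference between sub-critical and near-critical regimes can be illustrated with a generic branching process, a textbook toy model and not one of the models used in the studies cited above. Each active unit triggers on average m further units. For m < 1, activity dies out with an expected avalanche size of 1/(1 - m), which grows without bound as m approaches the critical value 1. The function names, the two-neighbor rule, and all parameter values are assumptions made for this sketch.

```python
import random

def avalanche_size(m, rng, max_size=10_000):
    """Size of one avalanche in a branching process with branching ratio m.

    Each active unit independently activates each of two neighbors with
    probability m / 2, so it triggers m units on average.
    """
    active, size = 1, 1
    while active and size < max_size:
        triggered = sum(1 for _ in range(2 * active) if rng.random() < m / 2)
        size += triggered
        active = triggered
    return size

def mean_avalanche_size(m, trials=2000, seed=0):
    """Average avalanche size over repeated trials with a fixed seed."""
    rng = random.Random(seed)
    return sum(avalanche_size(m, rng) for _ in range(trials)) / trials
```

Comparing `mean_avalanche_size(0.5)` with `mean_avalanche_size(0.9)` shows how a single perturbation stays local far from criticality and spreads widely close to it. In this toy picture, a sub-critical group damps false alarms, and tuning m toward 1 increases sensitivity.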

Figure 12: Haptic device used in decision making experiments to vary physical cost

Operationalizations of cognitive cost: Decision making under risk (Kahneman, 1979) is an important manifestation of intelligence. Empirical evidence suggests that humans minimize cognitive effort, much as they minimize physical work, following the so-called “law of less work” (Kool et al., 2010). However, most work on decision making equates cognitive effort with time-to-completion or response times. This central assumption might not be true. Doubts first arose more than two decades ago (Gilchrist et al., 2001), but there was little follow-up work. We have developed an experimental paradigm based on a haptic device (Figure 12) that allows us to vary the physical cost and the cognitive cost independently. We were able to demonstrate that an increased physical cost of actions leads to different, more effective decision-making strategies. This might indicate that we must consider physical and cognitive costs together to understand human decision making (Zenkri et al., 2024). We are currently validating this hypothesis using our paradigm. If it holds, the results obtained in decision-making research based solely on time-based cost measures would have to be re-evaluated. Studies considering physical and cognitive cost separately might lead to substantial progress.

Gradient-based strategies: During our research on efficient solvers for robotic manipulation planning, we found one recurring pattern: the power of differentiable models and gradient-based methods. The problems we consider are highly non-convex (with many local optima) and not simply differentiable (with a hybrid, discrete and continuous decision space). However, efficient solvers often reformulate these problems using differentiable models or learned surrogate models, so that local gradients or search steps become highly effective in finding global solutions (Toussaint et al., 2019). This engineering principle is also pervasive in modern AI, where gradient steps are effective in training highly non-linear neural functions. Examples include denoising diffusion models, which are trained to provide gradients that generate samples from highly complex multimodal distributions (in generative models), and the pairing of a decision policy with a discriminator (in Generative Adversarial Networks (GAN)) or a Q-function (in Reinforcement Learning (RL)), where the second provides gradients for the first. All these are tricks to “organize gradients” for problems that are highly non-linear and non-convex.

Our work on differentiable models and methods for manipulation reasoning supports the engineering principle that complex intelligent behavior can be generated by following gradients. These gradient-based approaches serve as powerful mechanistic models for the generation of intelligent behavior, as modern Artificial Intelligence has impressively demonstrated. Our broader perspective additionally makes it possible to combine different tools and heuristics within a gradient-based framework. This will play an important role in the construction of intelligent systems.
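
The idea of reformulating a non-convex problem so that local gradients lead to a global solution can be illustrated with graduated Gaussian smoothing on a one-dimensional toy objective. This is a stand-in technique chosen for brevity, not the solver of Toussaint et al. (2019). The objective, the smoothing schedule, the step size, and all function names are assumptions made for this sketch.

```python
import math

A, K = 2.0, 5.0  # amplitude and frequency of the ripples (arbitrary choices)

def objective(x):
    """Non-convex toy objective with many local minima; global minimum at x = 0."""
    return x * x + A * (1.0 - math.cos(K * x))

def smoothed_gradient(x, sigma):
    """Gradient of the objective after convolution with a Gaussian of width sigma.

    Smoothing damps the cosine term by exp(-K^2 sigma^2 / 2); sigma = 0
    recovers the exact gradient of `objective`.
    """
    damping = math.exp(-0.5 * (K * sigma) ** 2)
    return 2.0 * x + A * K * damping * math.sin(K * x)

def descend(x, sigma, steps=300, lr=0.01):
    """Plain gradient descent on the surrogate with smoothing width sigma."""
    for _ in range(steps):
        x -= lr * smoothed_gradient(x, sigma)
    return x

def plain_descent(x0):
    """Gradient descent on the original objective only."""
    return descend(x0, sigma=0.0)

def continuation_descent(x0, sigmas=(1.0, 0.5, 0.25, 0.1, 0.0)):
    """Descend on progressively less smoothed surrogates, ending on the original."""
    x = x0
    for sigma in sigmas:
        x = descend(x, sigma)
    return x
```

Started from `x0 = 3.0`, `plain_descent` settles in a nearby local minimum, whereas `continuation_descent` follows the gradients of the smoothed surrogates into the basin of the global minimum before refining on the original objective.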

Engineering practice for intelligent systems: We developed a novel approach to engineering systems, leading to robustness and autonomy in real-world settings (Li et al., 2024). This approach is inspired by biology, but runs counter to current engineering practice. We will continue to develop this alternative engineering practice for building intelligent systems. This approach will play an important role in SCIoI’s research and will lead to new kinds of Artificial Intelligence systems.

An innovative infrastructure for intelligence research

Another major outcome of SCIoI is the development of novel infrastructure for intelligence research in biological and artificial systems. Close collaborations between analytic and synthetic researchers ensure that the developed infrastructure serves as an innovative foundation for intelligence research as a whole, and for SCIoI in particular.

Tracking large groups of human foragers in the wild: SCIoI developed an approach to tracking large groups of human foragers in the wild in extremely challenging conditions (-20 °C, in deep snow, YouTube video). Time-synchronized tracking devices, head-mounted cameras, and heart-rate measurements provide an unprecedented amount of spatio-temporal and physiological data. To date, no study has investigated human foraging dynamics on this scale. Using our approach, we discovered that when selecting their next foraging location, humans integrate information on their own foraging success, the presence of others, and landscape features. They weigh these features adaptively, for example, by relying less on others when their own foraging success is high.

Drone-based tracking of moving animals: We propose a novel approach for tracking schools of fish in the open ocean from drone video footage. Our framework not only performs classical 2D object tracking but also tracks the position and spatial extent of the fish school by fusing the video data with the drone’s on-board sensor information, such as GPS coordinates and flight height. This framework allows researchers, for the first time, to study the collective behavior of fish schools in their natural social and environmental context, without invasive sensor techniques and in a scalable way. Using this approach, we discovered a new form of mutualism in group-hunting fish predators.
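
To make the fusion of video data with GPS coordinates and flight height concrete, the following sketch maps an image position to geographic coordinates and a bounding box to a metric extent. It is a simplified geometric illustration and not the framework described above. It assumes a camera pointing straight down, a flat water surface, square pixels, a known horizontal field of view, and a small-offset approximation for latitude and longitude. All function and parameter names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, adequate for small offsets

def meters_per_pixel(height_m, horizontal_fov_deg, image_width_px):
    """Ground distance covered by one pixel for a downward-facing camera."""
    ground_width = 2.0 * height_m * math.tan(math.radians(horizontal_fov_deg) / 2.0)
    return ground_width / image_width_px

def pixel_to_latlon(u, v, image_size, drone_lat, drone_lon,
                    height_m, heading_deg, horizontal_fov_deg):
    """Map pixel (u, v) to latitude/longitude on a flat water surface.

    Assumes the image center lies directly below the drone and the top of
    the image points along the heading (degrees clockwise from north).
    """
    width, height = image_size
    scale = meters_per_pixel(height_m, horizontal_fov_deg, width)
    right = (u - width / 2.0) * scale      # meters to the right of the drone
    forward = (height / 2.0 - v) * scale   # meters ahead (image y grows downward)
    theta = math.radians(heading_deg)
    north = forward * math.cos(theta) - right * math.sin(theta)
    east = forward * math.sin(theta) + right * math.cos(theta)
    lat = drone_lat + math.degrees(north / EARTH_RADIUS_M)
    lon = drone_lon + math.degrees(
        east / (EARTH_RADIUS_M * math.cos(math.radians(drone_lat))))
    return lat, lon

def school_extent_m(bbox, height_m, horizontal_fov_deg, image_width_px):
    """Width and length in meters of a pixel bounding box (u_min, v_min, u_max, v_max)."""
    scale = meters_per_pixel(height_m, horizontal_fov_deg, image_width_px)
    u_min, v_min, u_max, v_max = bbox
    return (u_max - u_min) * scale, (v_max - v_min) * scale
```

Under these assumptions, a 2D track in pixel coordinates becomes a geo-referenced trajectory, and the bounding box of a detected school yields its approximate size in meters. A real pipeline would additionally have to handle camera tilt, lens distortion, and sensor noise.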

Figure 13: Observing mice with 3 color cameras, 2 depth cameras, infrared lighting

Computational mouse ethology: In a close collaboration of researchers from behavioral biology, computer vision, and computational modeling, we developed a system for studying mouse behavior in home-cage based tasks, using a lockbox. The system includes multiple cameras (Figure 13) and allows studying sequential decision making in freely moving mice at an unprecedented observational level of detail. We apply Bayesian inference on state/action sequences to produce a detailed analysis of the behavioral strategies of mice (Boon et al., 2024). This collaboration has also led to a new experimental setup for large semi-natural settings, in which we plan to collect behavioral data on a “big data” scale, while also increasing the richness of the data (adding Radio Frequency Identification (RFID) detections and recordings of ultrasonic vocalizations (USV)) (YouTube video). This infrastructure paves the way for the fully automated and computationally supported study of mouse behavior.

Figure 14: Mouse lockboxes: A: levers & sliders lock a hidden reward, B: interlocked black tiles block a reward

Lockboxes as an experimental paradigm: SCIoI has established so-called lockboxes (Auersperg et al., 2013; Baum et al., 2017) as an experimental paradigm across species, enabling the comparative research approach. Lockboxes represent an inverse escape room in that one has to break into a locked room rather than escape from one. This experimental paradigm can be scaled in complexity to challenge animals in situations of high complexity, which is required due to the weak decomposability of intelligent agents. We have used lockboxes with robots (YouTube video, YouTube video) (Baum et al., 2017; Li et al., 2024), humans (YouTube video) (Zenkri et al., 2024), birds (YouTube video, YouTube video) (Baum et al., 2024), and mice (Figure 14, YouTube video) (Boon et al., 2024). Using similar experimental paradigms across species and scientific questions provides additional insights about intelligence that could be difficult to obtain otherwise.

Soft robotic hands: SCIoI has developed a novel type of robotic hand (Puhlmann et al., 2022), the Robotics and Biology Laboratory TU Berlin Hand 3, made of compliant materials (Figure 11). It supports forceful, safe interactions with the environment (principle of agent-environment computation) and leverages mechanical computation (principle of multiple computational paradigms). This robot hand facilitated the development of a novel, robust, and simple approach to robotic manipulation (Bhatt et al., 2021; Sieler and Brock, 2023). It outperforms systems based on deep learning (Patidar et al., 2023), illustrating how the principles of SCIoI lead to Artificial Intelligence technology that is robust, energy-efficient, and does not depend on vast amounts of data (YouTube video).

Acoustic morphological sensing: We have developed a novel sensing technology that is particularly suited to robotic hands made from soft materials. This technology uses sound waves to turn an object’s entire morphology (shape and material composition) into a distributed sensor (Wall et al., 2023), simply by integrating a tiny cellphone loudspeaker and microphone. With this technology, it becomes unnecessary to predetermine sensor types and placements. Using simple machine learning and little data, we can reconstruct physical aspects of the sensorized body, including, for example, shape (proprioception), contact, contact forces and moments, contacted material, or temperature. Using this technology, we were able to read braille with a finger of the Robotics and Biology Laboratory TU Berlin Hand 3 (Wall and Brock, 2022).

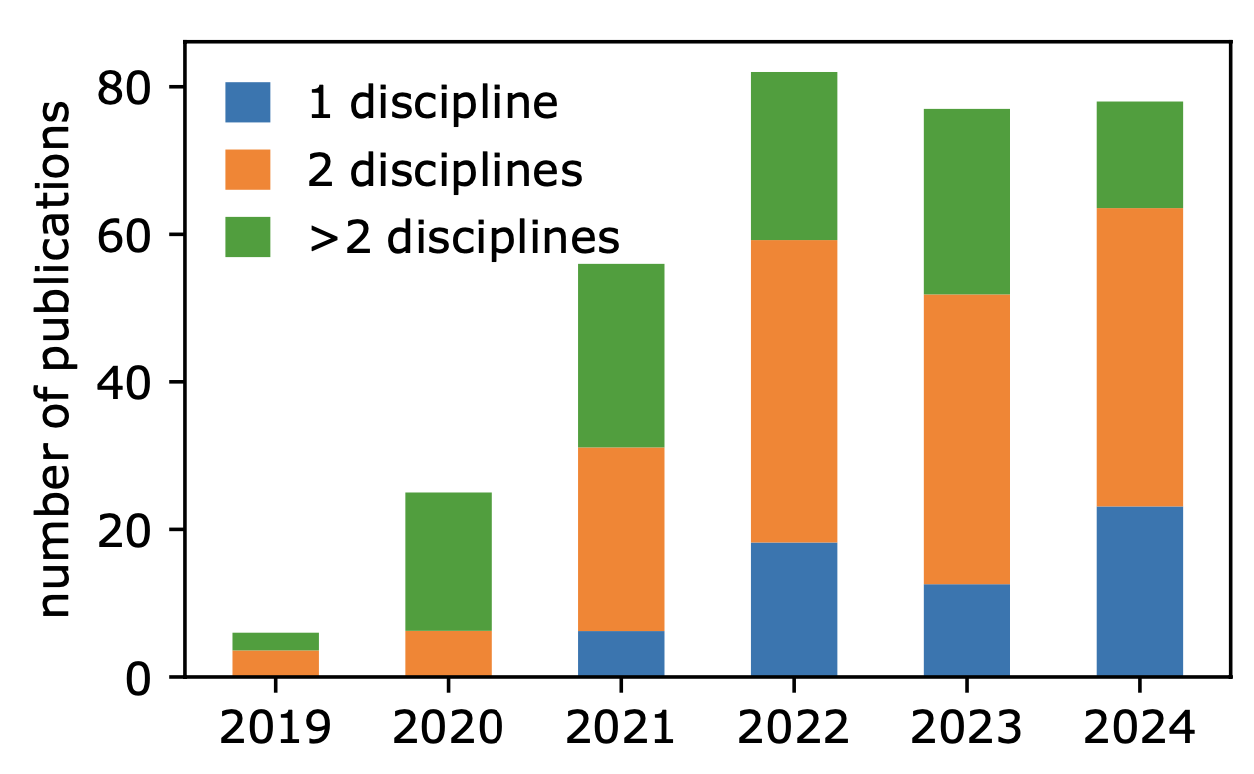

Figure 8: As of August 2024, SCIoI has produced 339 publications, 81% of which connect two or more disciplines.

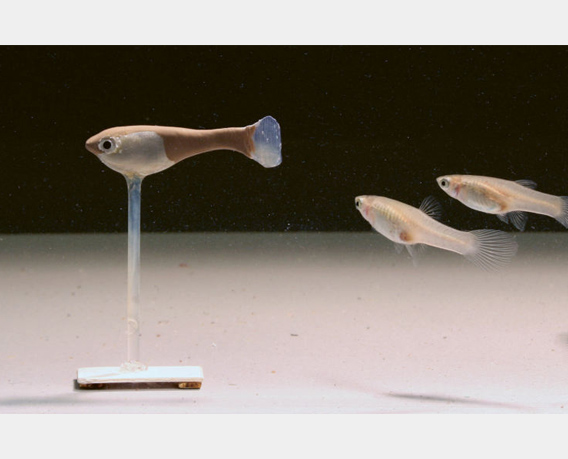

Figure 15: Live fish accept the robofish as a conspecific and adjust their behavior to it.

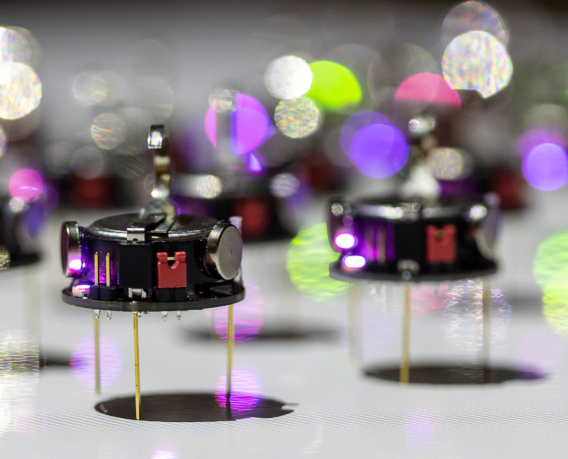

Figure 16: Swarm robotic setup with kilobots enables experiments with large collectives.

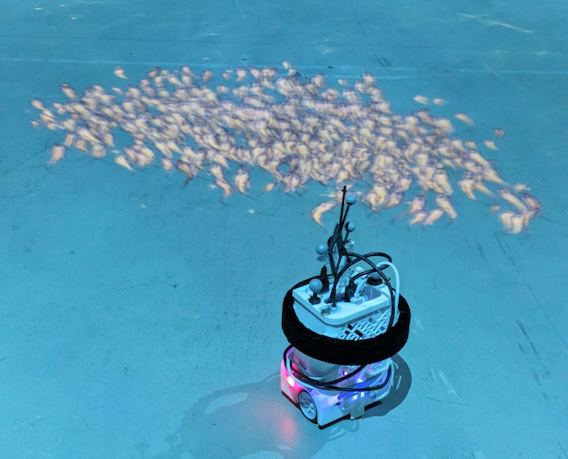

Figure 17: Thymio II interacting with a virtual fish swarm projected by the CoBe system.
