When machines learn to see differently, and artists start watching

What if the future of seeing lies not in seeing more, but in seeing differently? How a robot lab, a media artist, and a novel camera technology joined forces to offer a new perspective on our understanding of vision itself.

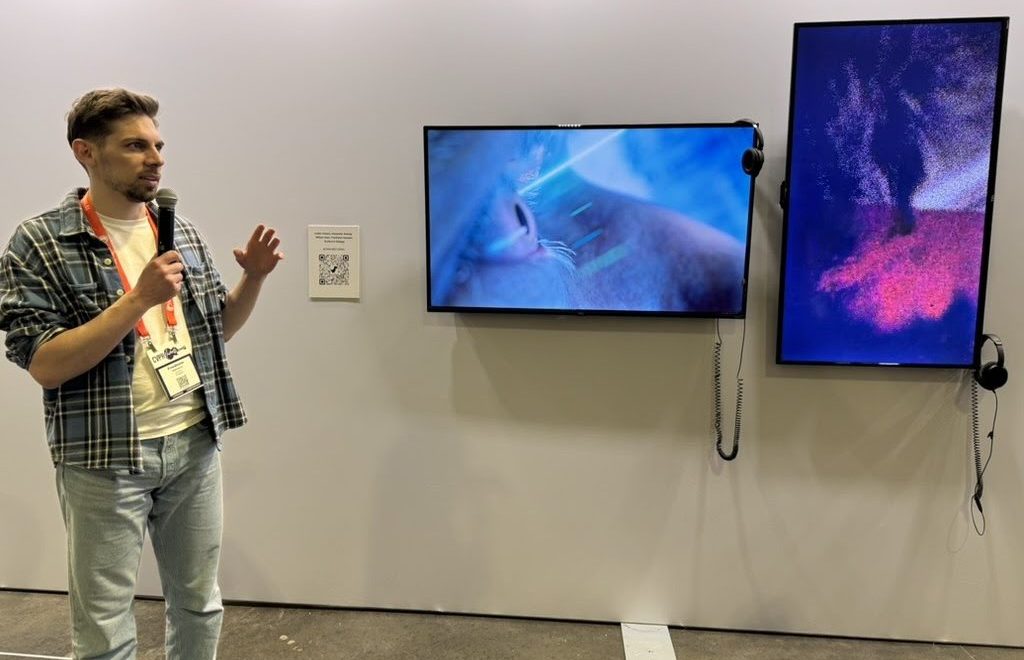

In a dimly lit room in the Galerie Stadt Sindelfingen, Germany, a figure flickers in and out of focus. Not in the way shadows do, but as if the visual system itself were glitching, registering only what moves, what shifts, what changes. The video installation is part of BLINDHÆD, the first major solo exhibition by artist Justin Urbach. But behind the futuristic, red-tinged visuals lies something unexpected: cutting-edge computer vision research from Science of Intelligence (SCIoI) and robotic arms from the Robotics and Biology Laboratory (RBO) at TU Berlin.

At the heart of the installation is a novel sensor known as an event camera. Unlike conventional cameras, which record full frames at regular intervals, event cameras respond only to changes in brightness: each pixel independently reports when it gets brighter or darker, producing a constant, jittery stream of “events” that capture motion and contrast in real time. The result looks more like a living sketch than a photograph.
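To make the principle concrete, here is a minimal Python sketch of how brightness changes might be turned into events. It is a simplified illustration under stated assumptions, not how any particular sensor or the SCIoI lab's software works. It compares two conventional frames and emits an event wherever the log brightness at a pixel changes by at least a contrast threshold. The names `Event` and `frames_to_events`, the threshold of 0.2, and the frame-pair approach are all hypothetical choices for illustration. A real event camera's pixels fire asynchronously, each against its own reference level and with far finer timing, and a large change can trigger several events rather than one.

```python
import math
from typing import List, NamedTuple, Sequence


class Event(NamedTuple):
    """One brightness-change event (hypothetical, simplified format)."""
    x: int         # pixel column
    y: int         # pixel row
    t: float       # timestamp in seconds
    polarity: int  # +1 = got brighter, -1 = got darker


def frames_to_events(
    prev: Sequence[Sequence[float]],
    curr: Sequence[Sequence[float]],
    t: float,
    threshold: float = 0.2,  # assumed contrast threshold on log intensity
    eps: float = 1e-3,       # avoids log(0) for completely dark pixels
) -> List[Event]:
    """Emit one event per pixel whose log brightness changed by >= threshold.

    Simplification: pixels are compared frame-to-frame, so unchanged
    pixels produce nothing, which is the key idea of event-based vision.
    """
    events: List[Event] = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (a, b) in enumerate(zip(row_prev, row_curr)):
            delta = math.log(b + eps) - math.log(a + eps)
            if abs(delta) >= threshold:
                events.append(Event(x, y, t, 1 if delta > 0 else -1))
    return events


# Usage: a tiny 2x3 "scene" where one pixel brightens and one darkens.
prev = [[0.5, 0.5, 0.5],
        [0.5, 0.5, 0.5]]
curr = [[0.5, 0.9, 0.5],
        [0.5, 0.5, 0.2]]
events = frames_to_events(prev, curr, t=0.01)
print(events)
```

In this toy scene only the two pixels that changed produce output; the four static pixels stay silent. That silence is what makes event data look like a sketch of motion rather than a picture of the scene.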

“Event-based vision is a new tool that opens up exciting new perspectives for robotics, pun intended,” laughs Friedhelm Hamann, a PhD candidate working with SCIoI PI Guillermo Gallego on the project “Active tracking using bioinspired event-based vision” at SCIoI. “But it’s also a fundamentally different way of understanding perception, something closer to how biological systems might prioritize change over stasis.”

This dislocation of visual norms was exactly what caught the attention of Alexander Koenig, another PhD researcher, this one at RBO. Alexander had long harbored an interest in artistic practice. When he met Justin Urbach, a media artist exploring sensory enhancement and the limits of vision, the idea for BLINDHÆD began to take shape.

Everything started with a good pinch of curiosity

“We played with the cameras in the lab,” Alexander and Friedhelm recall. “The first time Justin saw how the event data reacted to his movement, how it showed not him but the fact that he was moving, he was hooked. It was unlike anything he’d seen.”