Creating a robot that can integrate information from different sources and modalities

©SCIoI

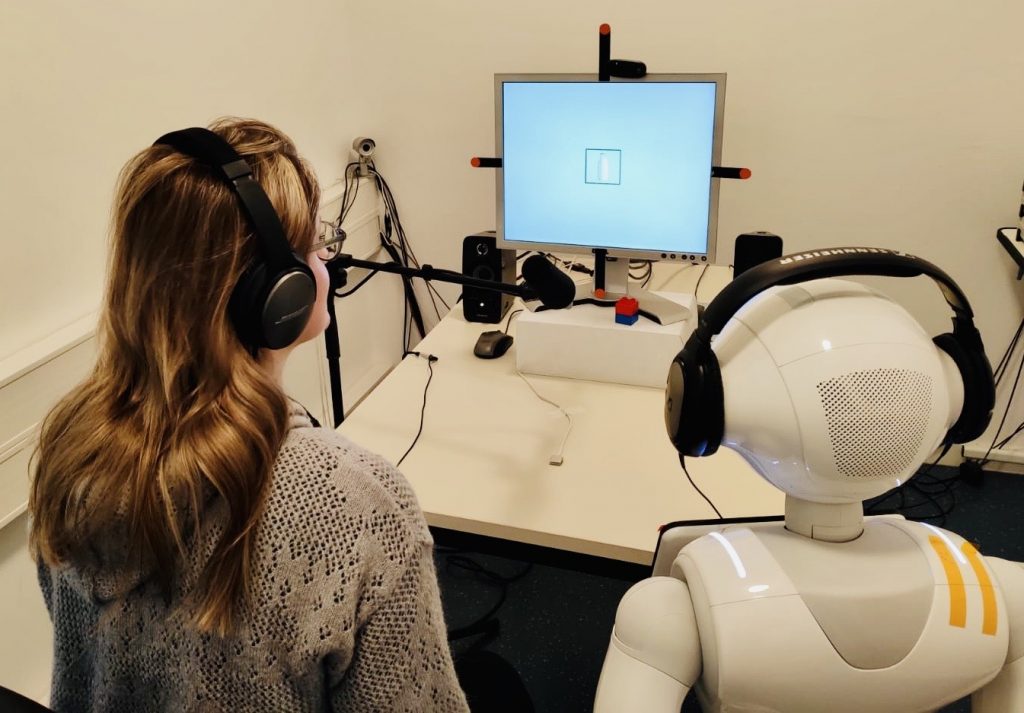

The overall goal of this project is to create a robot that can represent and integrate information from different sources and modalities for successful, task-oriented interactions with other agents. To fully understand the mechanisms of social interaction and communication in humans, and to replicate this complex human skill in technological artifacts, we must provide effective means of knowledge transfer between agents.

The first step of this project is therefore to describe the core components and determinants of communicative behavior, including joint attention, partner co-representation, information processing from different modalities, and the role of motivation and personal relevance (Kaplan & Hafner, 2006; Kuhlen & Abdel Rahman, 2017; Kuhlen et al., 2017). We will compare these functions in human-human, human-robot, and robot-robot interactions to identify commonalities and differences. This comparison will also consider the role of different presumed partner attributes (e.g., a robot described as “social” or “intelligent”). We will conduct behavioral, electrophysiological, and fMRI experiments to describe the microstructure of communicative behavior.

The second step of the project is to create predictive models for multimodal communication that can account for these psychological findings in humans. We will identify both the prerequisites and the factors acting as priors, and develop suitable computational models that represent multimodal sensory features in an abstract but biologically inspired way (suitable for extracting principles of intelligence; Schillaci et al., 2013).

Looking ahead, the third step of this project is to use these models to generate novel predictions of social behavior in humans.

Throughout the project we will focus on the processing of complex multimodal information, a central characteristic of social interactions that has nevertheless so far been investigated mostly within individual modalities. We assume that multimodal information, e.g., from auditory (speech), visual (face, eye gaze), or tactile (touch) channels, will augment the partner co-representation and will therefore improve communicative behavior.

%5C%2FICDL53763.2022.9962212%27%3Ehttps%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1109%5C%2FICDL53763.2022.9962212%3C%5C%2Fa%3E%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22conferencePaper%22%2C%22title%22%3A%22Forming%20robot%20trust%20in%20heterogeneous%20agents%20during%20a%20multimodal%20interactive%20game%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Murat%22%2C%22lastName%22%3A%22Kirtay%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Erhan%22%2C%22lastName%22%3A%22Oztop%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Anna%20K.%22%2C%22lastName%22%3A%22Kuhlen%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Minoru%22%2C%22lastName%22%3A%22Asada%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Verena%20V.%22%2C%22lastName%22%3A%22Hafner%22%7D%5D%2C%22abstractNote%22%3A%22%22%2C%22date%22%3A%222022%22%2C%22proceedingsTitle%22%3A%222022%20IEEE%20International%20Conference%20on%20Development%20and%20Learning%20%28ICDL%29%22%2C%22conferenceName%22%3A%22%22%2C%22language%22%3A%22%22%2C%22DOI%22%3A%2210.1109%5C%2FICDL53763.2022.9962212%22%2C%22ISBN%22%3A%22%22%2C%22url%22%3A%22%22%2C%22collections%22%3A%5B%5D%2C%22dateModified%22%3A%222025-05-30T15%3A00%3A34Z%22%7D%7D%2C%7B%22key%22%3A%22INT88S73%22%2C%22library%22%3A%7B%22id%22%3A2756394%7D%2C%22meta%22%3A%7B%22creatorSummary%22%3A%22Kirtay%20et%20al.%22%2C%22parsedDate%22%3A%222022%22%2C%22numChildren%22%3A0%7D%2C%22bib%22%3A%22%3Cdiv%20class%3D%5C%22csl-bib-body%5C%22%20style%3D%5C%22line-height%3A%202%3B%20padding-left%3A%201em%3B%20text-indent%3A-1em%3B%5C%22%3E%5Cn%20%20%3Cdiv%20class%3D%5C%22csl-entry%5C%22%3EKirtay%2C%20M.%2C%20Oztop%2C%20E.%2C%20Kuhlen%2C%20A.%20K.%2C%20Asada%2C%20M.%2C%20%26amp%3B%20Hafner%2C%20V.%20V.%20%282022%29.%20Trustworthiness%20assessment%20in%20multimodal%20human-robot%20interaction%20based%20on%20cognitive%20load.%20%3Ci%3E2022%2031st%20IEEE%20Internatio
nal%20Conference%20on%20Robot%20and%20Human%20Interactive%20Communication%20%28RO-MAN%29%3C%5C%2Fi%3E%2C%20469%26%23x2013%3B476.%20%3Ca%20class%3D%27zp-DOIURL%27%20href%3D%27https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1109%5C%2FRO-MAN53752.2022.9900730%27%3Ehttps%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1109%5C%2FRO-MAN53752.2022.9900730%3C%5C%2Fa%3E%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22conferencePaper%22%2C%22title%22%3A%22Trustworthiness%20assessment%20in%20multimodal%20human-robot%20interaction%20based%20on%20cognitive%20load%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Murat%22%2C%22lastName%22%3A%22Kirtay%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Erhan%22%2C%22lastName%22%3A%22Oztop%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Anna%20K.%22%2C%22lastName%22%3A%22Kuhlen%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Minoru%22%2C%22lastName%22%3A%22Asada%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Verena%20V.%22%2C%22lastName%22%3A%22Hafner%22%7D%5D%2C%22abstractNote%22%3A%22%22%2C%22date%22%3A%222022%22%2C%22proceedingsTitle%22%3A%222022%2031st%20IEEE%20International%20Conference%20on%20Robot%20and%20Human%20Interactive%20Communication%20%28RO-MAN%29%22%2C%22conferenceName%22%3A%22%22%2C%22language%22%3A%22%22%2C%22DOI%22%3A%2210.1109%5C%2FRO-MAN53752.2022.9900730%22%2C%22ISBN%22%3A%22%22%2C%22url%22%3A%22%22%2C%22collections%22%3A%5B%5D%2C%22dateModified%22%3A%222025-05-30T15%3A00%3A34Z%22%7D%7D%2C%7B%22key%22%3A%2286I79R74%22%2C%22library%22%3A%7B%22id%22%3A2756394%7D%2C%22meta%22%3A%7B%22creatorSummary%22%3A%22Kirtay%20et%20al.%22%2C%22parsedDate%22%3A%222023%22%2C%22numChildren%22%3A0%7D%2C%22bib%22%3A%22%3Cdiv%20class%3D%5C%22csl-bib-body%5C%22%20style%3D%5C%22line-height%3A%202%3B%20padding-left%3A%201em%3B%20text-indent%3A-1em%3B%5C%22%3E%5Cn%20%20%3Cdiv%20class%3D%5C%22csl-entry%5C%22%3EKirtay%2C%20M.%2C%20Haf
ner%2C%20V.%20V.%2C%20Asada%2C%20M.%2C%20%26amp%3B%20Oztop%2C%20E.%20%282023%29.%20Interplay%20Between%20Neural%20Computational%20Energy%20and%20Multimodal%20Processing%20in%20Robot-Robot%20Interaction.%20%3Ci%3E2023%20IEEE%20International%20Conference%20on%20Development%20and%20Learning%20%28ICDL%29%3C%5C%2Fi%3E%2C%2015%26%23x2013%3B21.%20%3Ca%20class%3D%27zp-DOIURL%27%20href%3D%27https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1109%5C%2FICDL55364.2023.10364527%27%3Ehttps%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1109%5C%2FICDL55364.2023.10364527%3C%5C%2Fa%3E%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22conferencePaper%22%2C%22title%22%3A%22Interplay%20Between%20Neural%20Computational%20Energy%20and%20Multimodal%20Processing%20in%20Robot-Robot%20Interaction%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Murat%22%2C%22lastName%22%3A%22Kirtay%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Verena%20V.%22%2C%22lastName%22%3A%22Hafner%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Minoru%22%2C%22lastName%22%3A%22Asada%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Erhan%22%2C%22lastName%22%3A%22Oztop%22%7D%5D%2C%22abstractNote%22%3A%22%22%2C%22date%22%3A%222023%22%2C%22proceedingsTitle%22%3A%222023%20IEEE%20International%20Conference%20on%20Development%20and%20Learning%20%28ICDL%29%22%2C%22conferenceName%22%3A%22%22%2C%22language%22%3A%22%22%2C%22DOI%22%3A%2210.1109%5C%2FICDL55364.2023.10364527%22%2C%22ISBN%22%3A%22%22%2C%22url%22%3A%22%22%2C%22collections%22%3A%5B%5D%2C%22dateModified%22%3A%222025-05-30T15%3A00%3A29Z%22%7D%7D%2C%7B%22key%22%3A%22QG6X4VFJ%22%2C%22library%22%3A%7B%22id%22%3A2756394%7D%2C%22meta%22%3A%7B%22creatorSummary%22%3A%22Kirtay%20et%20al.%22%2C%22parsedDate%22%3A%222023%22%2C%22numChildren%22%3A1%7D%2C%22bib%22%3A%22%3Cdiv%20class%3D%5C%22csl-bib-body%5C%22%20style%3D%5C%22line-height%3A%202%3B%20padding-left%3A%201em%3B%20text-indent%3A-1em%3B%5
C%22%3E%5Cn%20%20%3Cdiv%20class%3D%5C%22csl-entry%5C%22%3EKirtay%2C%20M.%2C%20Hafner%2C%20V.%20V.%2C%20Asada%2C%20M.%2C%20%26amp%3B%20Oztop%2C%20E.%20%282023%29.%20Trust%20in%20robot-robot%20scaffolding.%20%3Ci%3EIEEE%20Transactions%20on%20Cognitive%20and%20Developmental%20Systems%3C%5C%2Fi%3E.%20%3Ca%20class%3D%27zp-DOIURL%27%20href%3D%27https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1109%5C%2FTCDS.2023.3235974%27%3Ehttps%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1109%5C%2FTCDS.2023.3235974%3C%5C%2Fa%3E%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22journalArticle%22%2C%22title%22%3A%22Trust%20in%20robot-robot%20scaffolding%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Murat%22%2C%22lastName%22%3A%22Kirtay%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Verena%20V.%22%2C%22lastName%22%3A%22Hafner%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Minoru%22%2C%22lastName%22%3A%22Asada%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Erhan%22%2C%22lastName%22%3A%22Oztop%22%7D%5D%2C%22abstractNote%22%3A%22%22%2C%22date%22%3A%222023%22%2C%22language%22%3A%22%22%2C%22DOI%22%3A%2210.1109%5C%2FTCDS.2023.3235974%22%2C%22ISSN%22%3A%22%22%2C%22url%22%3A%22%22%2C%22collections%22%3A%5B%5D%2C%22dateModified%22%3A%222025-05-30T15%3A00%3A29Z%22%7D%7D%2C%7B%22key%22%3A%22JKJMXAXV%22%2C%22library%22%3A%7B%22id%22%3A2756394%7D%2C%22meta%22%3A%7B%22creatorSummary%22%3A%22Eiserbeck%20et%20al.%22%2C%22parsedDate%22%3A%222024%22%2C%22numChildren%22%3A0%7D%2C%22bib%22%3A%22%3Cdiv%20class%3D%5C%22csl-bib-body%5C%22%20style%3D%5C%22line-height%3A%202%3B%20padding-left%3A%201em%3B%20text-indent%3A-1em%3B%5C%22%3E%5Cn%20%20%3Cdiv%20class%3D%5C%22csl-entry%5C%22%3EEiserbeck%2C%20A.%2C%20Wudarczyk%2C%20O.%2C%20Kuhlen%2C%20A.%2C%20Hafner%2C%20V.%20V.%2C%20Haynes%2C%20J.-D.%2C%20%26amp%3B%20Abdel%20Rahman%2C%20R.%20%282024%29.%20%3Ci%3ECommunicative%20context%20enhances%20emotional%20word%20processin
g%20with%20human%20speakers%20but%20not%20with%20robots%3C%5C%2Fi%3E.%20ASSC27.%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22document%22%2C%22title%22%3A%22Communicative%20context%20enhances%20emotional%20word%20processing%20with%20human%20speakers%20but%20not%20with%20robots%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Anna%22%2C%22lastName%22%3A%22Eiserbeck%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Olga%22%2C%22lastName%22%3A%22Wudarczyk%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Anna%22%2C%22lastName%22%3A%22Kuhlen%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Verena%20Vanessa%22%2C%22lastName%22%3A%22Hafner%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22John-Dylan%22%2C%22lastName%22%3A%22Haynes%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Rasha%22%2C%22lastName%22%3A%22Abdel%20Rahman%22%7D%5D%2C%22abstractNote%22%3A%22%22%2C%22date%22%3A%222024%22%2C%22language%22%3A%22%22%2C%22url%22%3A%22%22%2C%22collections%22%3A%5B%5D%2C%22dateModified%22%3A%222025-05-30T15%3A00%3A29Z%22%7D%7D%2C%7B%22key%22%3A%22ERXS36TA%22%2C%22library%22%3A%7B%22id%22%3A2756394%7D%2C%22meta%22%3A%7B%22creatorSummary%22%3A%22Cheval%5Cu00e8re%20et%20al.%22%2C%22parsedDate%22%3A%222022%22%2C%22numChildren%22%3A1%7D%2C%22bib%22%3A%22%3Cdiv%20class%3D%5C%22csl-bib-body%5C%22%20style%3D%5C%22line-height%3A%202%3B%20padding-left%3A%201em%3B%20text-indent%3A-1em%3B%5C%22%3E%5Cn%20%20%3Cdiv%20class%3D%5C%22csl-entry%5C%22%3ECheval%26%23xE8%3Bre%2C%20J.%2C%20Kirtay%2C%20M.%2C%20Hafner%2C%20V.%2C%20%26amp%3B%20Lazarides%2C%20R.%20%282022%29.%20Who%20to%20Observe%20and%20Imitate%20in%20Humans%20and%20Robots%3A%20The%20Importance%20of%20Motivational%20Factors.%20%3Ci%3EInternational%20Journal%20of%20Social%20Robotics%3C%5C%2Fi%3E.%20%3Ca%20class%3D%27zp-DOIURL%27%20href%3D%27https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1007%5C%2Fs1
2369-022-00923-9%27%3Ehttps%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1007%5C%2Fs12369-022-00923-9%3C%5C%2Fa%3E%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22journalArticle%22%2C%22title%22%3A%22Who%20to%20Observe%20and%20Imitate%20in%20Humans%20and%20Robots%3A%20The%20Importance%20of%20Motivational%20Factors%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Johann%22%2C%22lastName%22%3A%22Cheval%5Cu00e8re%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Murat%22%2C%22lastName%22%3A%22Kirtay%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Verena%22%2C%22lastName%22%3A%22Hafner%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Rebecca%22%2C%22lastName%22%3A%22Lazarides%22%7D%5D%2C%22abstractNote%22%3A%22%22%2C%22date%22%3A%222022%22%2C%22language%22%3A%22%22%2C%22DOI%22%3A%2210.1007%5C%2Fs12369-022-00923-9%22%2C%22ISSN%22%3A%22%22%2C%22url%22%3A%22https%3A%5C%2F%5C%2Flink.springer.com%5C%2Fcontent%5C%2Fpdf%5C%2F10.1007%5C%2Fs12369-022-00923-9.pdf%22%2C%22collections%22%3A%5B%5D%2C%22dateModified%22%3A%222025-05-30T15%3A00%3A34Z%22%7D%7D%5D%7D

Yun, H. S., Hübert, H., Taliaronak, V., Mayet, R., Kirtay, M., Hafner, V. V., & Pinkwart, N. (2022). AI-based Open-Source Gesture Retargeting to a Humanoid Teaching Robot. AIED 2022: The 23rd International Conference on Artificial Intelligence in Education. https://link.springer.com/chapter/10.1007/978-3-031-11647-6_51

Yun, H. S., Taliaronak, V., Kirtay, M., Chevalère, J., Hübert, H., Hafner, V. V., Pinkwart, N., & Lazarides, R. (2022). Challenges in Designing Teacher Robots with Motivation Based Gestures. 17th Annual ACM/IEEE International Conference on Human-Robot Interaction (HRI 2022). https://arxiv.org/abs/2302.03942

Wudarczyk, O. A., Kirtay, M., Pischedda, D., Hafner, V. V., Haynes, J.-D., Kuhlen, A. K., & Abdel Rahman, R. (2021). Robots facilitate human language production. Scientific Reports, 11(1), 16737. https://doi.org/10.1038/s41598-021-95645-9

Wudarczyk, O. A., Kirtay, M., Kuhlen, A. K., Abdel Rahman, R., Haynes, J.-D., Hafner, V. V., & Pischedda, D. (2021). Bringing Together Robotics, Neuroscience, and Psychology: Lessons Learned From an Interdisciplinary Project. Frontiers in Human Neuroscience, 15. https://doi.org/10.3389/fnhum.2021.630789

Spatola, N., & Wudarczyk, O. A. (2020). Implicit Attitudes Towards Robots Predict Explicit Attitudes, Semantic Distance Between Robots and Humans, Anthropomorphism, and Prosocial Behavior: From Attitudes to Human–Robot Interaction. International Journal of Social Robotics. https://doi.org/10.1007/s12369-020-00701-5

Spatola, N., & Wudarczyk, O. (2021). Ascribing emotions to robots: Explicit and implicit attribution of emotions and perceived robot anthropomorphism. Computers in Human Behavior, 124, 106934. https://doi.org/10.1016/j.chb.2021.106934

Pischedda, D., Lange, A., Kirtay, M., Wudarczyk, O. A., Abdel Rahman, R., Hafner, V. V., Kuhlen, A. K., & Haynes, J.-D. (2021). Am I speaking to a human, a robot, or a computer? Neural representations of task partners in communicative interactions with humans or artificial agents. Neuroscience 2021.

Pischedda, D., Lange, A., Kirtay, M., Wudarczyk, O. A., Abdel Rahman, R., Hafner, V. V., Kuhlen, A. K., & Haynes, J.-D. (2021). Who is my interlocutor? Partner-specific neural representations during communicative interactions with human or artificial task partners. 5th Virtual Social Interactions (VSI) Conference.

Pischedda, D., Erener, S., Kuhlen, A., & Haynes, J.-D. (2023). How do people discriminate conversations generated by humans and artificial intelligence? The role of individual variability on people’s judgment. ESCOP 2023.

Pischedda, D., Kaufmann, V., Wudarczyk, O., Abdel Rahman, R., Hafner, V. V., Kuhlen, A., & Haynes, J.-D. (2023). Human or AI? The brain knows it! A brain-based Turing Test to discriminate between human and artificial agents. RO-MAN 2023. https://doi.org/10.1109/RO-MAN57019.2023.10309541

Kirtay, M., Wudarczyk, O. A., Pischedda, D., Kuhlen, A. K., Abdel Rahman, R., Haynes, J.-D., & Hafner, V. V. (2020). Modeling robot co-representation: state-of-the-art, open issues, and predictive learning as a possible framework. 2020 Joint IEEE 10th International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob), 1–8. https://doi.org/10.1109/ICDL-EpiRob48136.2020.9278031

Kirtay, M., Chevalère, J., Lazarides, R., & Hafner, V. V. (2021). Learning in Social Interaction: Perspectives from Psychology and Robotics. 2021 IEEE International Conference on Development and Learning (ICDL), 1–8. https://doi.org/10.1109/ICDL49984.2021.9515648

Kirtay, M., Oztop, E., Asada, M., & Hafner, V. V. (2021). Modeling robot trust based on emergent emotion in an interactive task. 2021 IEEE International Conference on Development and Learning (ICDL), 1–8. https://doi.org/10.1109/ICDL49984.2021.9515645

Kirtay, M., Oztop, E., Asada, M., & Hafner, V. V. (2021). Trust me! I am a robot: an affective computational account of scaffolding in robot-robot interaction. 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), 189–196. https://doi.org/10.1109/RO-MAN50785.2021.9515494

Kirtay, M., Oztop, E., Kuhlen, A. K., Asada, M., & Hafner, V. V. (2022). Forming robot trust in heterogeneous agents during a multimodal interactive game. 2022 IEEE International Conference on Development and Learning (ICDL), 307–313. https://doi.org/10.1109/ICDL53763.2022.9962212

Kirtay, M., Oztop, E., Kuhlen, A. K., Asada, M., & Hafner, V. V. (2022). Trustworthiness assessment in multimodal human-robot interaction based on cognitive load. 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 469–476. https://doi.org/10.1109/RO-MAN53752.2022.9900730

Kirtay, M., Hafner, V. V., Asada, M., & Oztop, E. (2023). Interplay Between Neural Computational Energy and Multimodal Processing in Robot-Robot Interaction. 2023 IEEE International Conference on Development and Learning (ICDL), 15–21. https://doi.org/10.1109/ICDL55364.2023.10364527

Kirtay, M., Hafner, V. V., Asada, M., & Oztop, E. (2023). Trust in robot-robot scaffolding. IEEE Transactions on Cognitive and Developmental Systems. https://doi.org/10.1109/TCDS.2023.3235974

Eiserbeck, A., Wudarczyk, O., Kuhlen, A., Hafner, V. V., Haynes, J.-D., & Abdel Rahman, R. (2024). Communicative context enhances emotional word processing with human speakers but not with robots. ASSC27.

Chevalère, J., Kirtay, M., Hafner, V., & Lazarides, R. (2022). Who to Observe and Imitate in Humans and Robots: The Importance of Motivational Factors. International Journal of Social Robotics. https://doi.org/10.1007/s12369-022-00923-9