Between gestures and robots: Jonas Frenkel on nonverbal cues and social intelligence

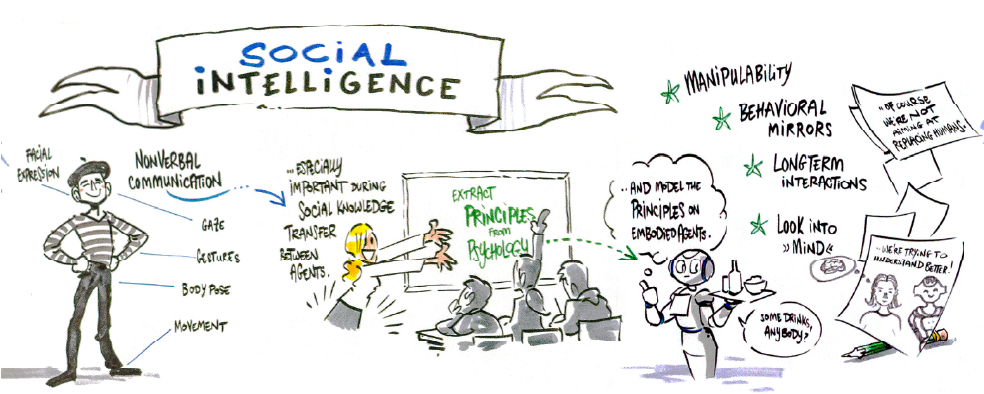

What makes a student lean forward in interest, or tune out completely? Cognitive scientist Jonas Frenkel believes the answer lies not only in words but also in the choreography of gestures, gaze, and posture. At Science of Intelligence (SCIoI), he studies these invisible cues, using computational tools to find out how they shape social learning and what they could reveal about the principles of human intelligence.

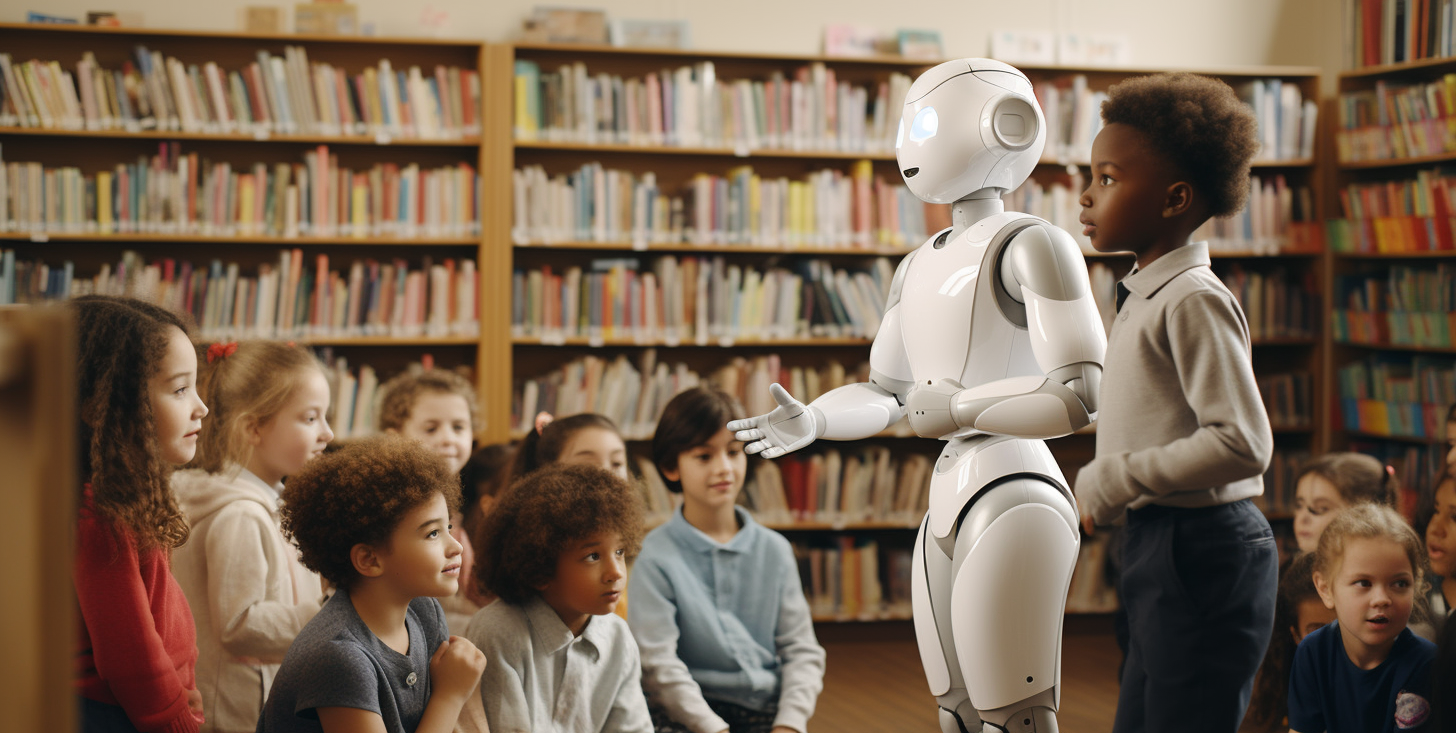

By framing these subtle exchanges within a larger effort to understand social intelligence, SCIoI’s researchers connect the classroom to a broader question: how do agents, whether human or artificial, share knowledge in ways that go beyond words? The same signals that guide a child through a puzzle or a student through a lesson also point to the deeper principles of how intelligence itself is transferred, accumulated, and scaled in social contexts.

In research terms, these gestures, gazes, and postures are forms of nonverbal communication, the focus of Jonas’ work. “Nonverbal communication is everywhere in teaching,” he says. “A glance, a nod, even how close a teacher stands, these things shape how students feel and how much they learn. Yet they’re so automatic we barely notice them, until they’re missing.”

Spotting the signals behind social learning

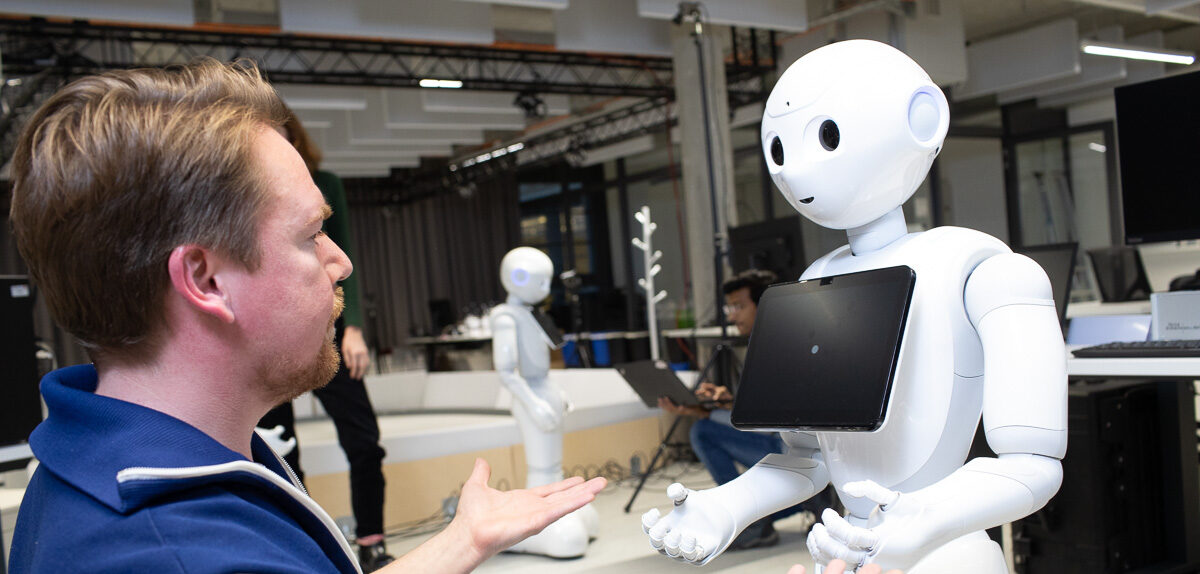

Humans, much like other animals, don’t have to discover everything from scratch. Much of our intelligence comes from social learning. We watch, imitate, and interpret others’ behaviors, and nonverbal communication plays a central role in this process. In his project, Jonas investigates how algorithms can help us better understand the nonverbal signals that drive social learning. With his colleagues, he analyzes hours of classroom footage, breaking down interactions into fragments of gestures, gazes, and expressions, and trains computer vision models to spot them.

“In psychology, we usually code behavior manually, often in 20-minute stretches, and it’s incredibly time-consuming,” he explains. “An algorithm, on the other hand, has to learn frame by frame what matters. Humans make these judgments instantly. Machines don’t.”

The lab at the University of Potsdam where Jonas works (SCIoI PI Rebecca Lazarides) uses methods such as eye tracking and electrodermal activity (EDA) recording to measure how students respond to different teaching styles, for instance when a teacher maintains eye contact instead of avoiding it, uses expressive gestures instead of standing still, or moves closer to the students instead of keeping a distance. But the point isn’t simply to build a machine that “sees” like a human. Rather, it’s a way of testing theories: Are our assumptions about how people pick up on these subtle signals actually backed up? If the model succeeds, it supports the theory. If it fails, it shows that our understanding is incomplete. “In this way, the model becomes our scientific probe, helping us refine our theories and, ultimately, understand the principles of social intelligence itself,” says Jonas.