Silicon retinas help robots navigate the world

For a robot to navigate the real world, it needs to perceive the 3D structure of the scene while in motion and continuously estimate the depth of its surroundings. Humans do this effortlessly with stereoscopic vision — the brain’s ability to register a sense of 3D shape and form from visual inputs.

The brain uses the disparity, the small offset between where a point appears in the left-eye and right-eye views, to judge how far away it is: the nearer the point, the larger the offset. This requires knowing which parts of each view correspond to the same physical point in the scene. For robots, establishing these correspondences from raw camera pixel data is difficult.
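For a rectified pair of pinhole cameras, the textbook relation between disparity and depth is Z = f·B/d, where f is the focal length in pixels, B is the baseline between the two viewpoints, and d is the disparity in pixels. The short sketch below assumes that idealized model; the rig values (700 px focal length, 12 cm baseline) and the function name `depth_from_disparity` are hypothetical, chosen only for illustration.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified pinhole stereo pair (textbook model)."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 12 cm baseline between the two cameras.
for d in (42.0, 21.0, 7.0):
    print(f"disparity {d:4.0f} px -> depth {depth_from_disparity(700.0, 0.12, d):.2f} m")
```

Halving the disparity doubles the estimated depth, which is why depth estimates become less precise for distant objects, whose disparities shrink to just a few pixels.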

To address the problem of depth estimation in robots, our researchers Suman Ghosh and Guillermo Gallego fuse data from multiple moving event cameras, bio-inspired sensors whose pixels independently report brightness changes as spike-like “events” instead of capturing full frames. In a study recently published in the journal Advanced Intelligent Systems, they reported a method for combining this spike-based data into a coherent 3D map. “Every time the pixel of an event camera generates data, we can use the known camera motion to trace the path of the light ray that triggered it,” said Gallego. “Since the events are generated from the apparent motion of the same 3D structure in the scene, the points where the rays meet give us cues about the location of the 3D points in space.”
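The ray-tracing idea in Gallego's quote can be sketched in a few lines. This is a simplified space-sweep voting scheme, not the authors' refocused-events fusion method. It assumes calibrated pinhole cameras that translate without rotating and look along +Z, events given in normalized image coordinates with known camera positions, and a coarse voxel grid; the names `project`, `ray_votes`, and `fuse` are hypothetical. Each event's ray votes for every voxel it crosses, and voxels where many rays meet are likely 3D points. To mimic combining several cameras, the per-camera vote volumes are fused with an element-wise minimum, an illustrative assumption that discards cells supported by only one camera, such as an injected noise event.

```python
from collections import Counter

# Simplifying assumptions (not from the paper): pinhole cameras that translate
# without rotating, optical axis along +Z, events in normalized image coordinates.


def project(point, center):
    """Normalized image coordinates (x, y) of a 3D point seen from a camera center."""
    px, py, pz = point
    cx, cy, cz = center
    return ((px - cx) / (pz - cz), (py - cy) / (pz - cz))


def ray_votes(events, depths, cell):
    """Back-project each event as a ray and count how many rays cross each voxel.

    events: list of (camera_center, (x, y)) pairs.
    depths: the Z planes to sweep; cell: lateral voxel size in scene units.
    Returns a sparse vote volume keyed by (ix, iy, z).
    """
    votes = Counter()
    for (cx, cy, cz), (x, y) in events:
        for z in depths:
            t = z - cz  # ray parameter at which the ray reaches the plane Z = z
            if t <= 0:
                continue  # plane lies behind this camera
            X, Y = cx + t * x, cy + t * y
            votes[(round(X / cell), round(Y / cell), z)] += 1
    return votes


def fuse(volumes):
    """Fuse per-camera vote volumes with an element-wise minimum (illustrative choice)."""
    fused = volumes[0]
    for volume in volumes[1:]:
        fused = fused & volume  # Counter '&' keeps min counts, drops cells missing anywhere
    return fused


# Synthetic scene: one 3D point seen by two cameras, each moving along the X axis.
point = (0.5, -0.2, 4.0)
depths = [1.0 + 0.5 * k for k in range(15)]  # Z planes from 1.0 to 8.0
cell = 0.1
cam_a = [((cx, 0.0, 0.0), project(point, (cx, 0.0, 0.0))) for cx in (-1.0, -0.5, 0.0)]
cam_b = [((cx, 0.0, 0.0), project(point, (cx, 0.0, 0.0))) for cx in (0.5, 1.0)]
cam_a.append(((0.0, 0.0, 0.0), (-0.3, 0.25)))  # spurious noise event seen only by camera A

fused = fuse([ray_votes(cam_a, depths, cell), ray_votes(cam_b, depths, cell)])
(ix, iy, z), n = fused.most_common(1)[0]
print(f"X={ix * cell:.2f}, Y={iy * cell:.2f}, Z={z}, votes={n}")  # X=0.50, Y=-0.20, Z=4.0, votes=2
```

The strongest fused voxel recovers the synthetic point, while the noise event from camera A does not survive fusion. The minimum is only one possible fusion rule; summing the volumes would keep more support but also more outliers.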


Reference: Suman Ghosh and Guillermo Gallego, "Multi-Event-Camera Depth Estimation and Outlier Rejection by Refocused Events Fusion," Advanced Intelligent Systems (2022). DOI: 10.1002/aisy.202200221

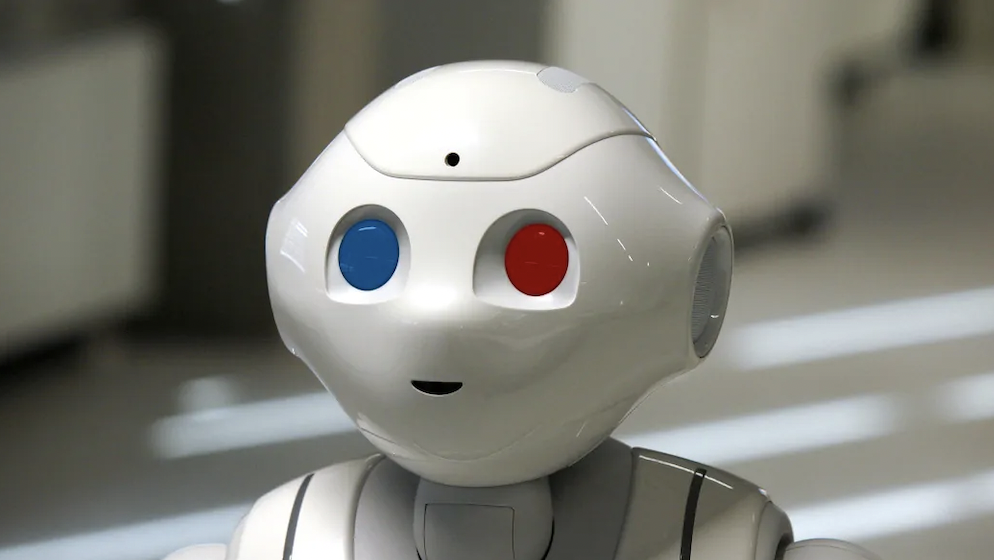

Feature image: A robot using stereoscopic vision at the Science of Intelligence Excellence Cluster. Credit: Guillermo Gallego